SGU Episode 574


| SGU Episode 574 |

|---|

| July 9th 2016 |

|


| Skeptical Rogues |

| S: Steven Novella |

B: Bob Novella |

C: Cara Santa Maria |

J: Jay Novella |

E: Evan Bernstein |

| Quote of the Week |

The universe is probably littered with the one-planet graves of cultures which made the sensible economic decision that there's no good reason to go into space – each discovered, studied, and remembered by the ones who made the irrational decision. |

| Links |

| Download Podcast |

| Show Notes |

| Forum Discussion |

Introduction

You're listening to the Skeptics' Guide to the Universe, your escape to reality.

S: Hello, and welcome to The Skeptics' Guide to the Universe. Today is Wednesday, July 6th, 2016; and this is your host, Steven Novella. Joining me this week are Bob Novella,

Evan's Italy Vacation (0:26)

Forgotten Superheroes of Science (6:18)

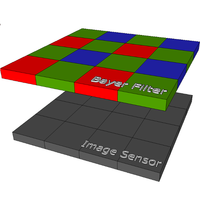

- Bryce Bayer

News Items

A Psychiatrist Falls for Exorcism (9:21)

S: All right, let's move on to some news items. You guys remember John Mack?

C: John Mack?

B: Yeah!

E: Oh yes! Sure.

S: Psychiatrist who came to the conclusion that some of his patients were actually abducted by aliens.

C: (Groaning) Oh no ...

S: Yeah. Well, we have another psychiatrist, Richard Gallagher, who might be worse. He's convinced that some of the cases that he's investigated, of people who might be mentally ill, are in fact possessed by demons.

E: Yeah

C: Uh huh ...

S: And he's a Yale-trained psychiatrist, so I guess we're even now with out ... yeah.

C: He's a Yale-trained psychiatrist ...

E: (Chuckling) Oh no!

C: from four hundred years ago?

(Steve and Cara laugh)

E: Steve, this is your domain, you're directly responsible ...

S: Yeah

E: for making sure this guy gets on ...

C: You work in a neighboring lab, you are just as culpable.

S: He's not working in ...

E: You guys practically share an office with the guy.

S: He's not currently working at Yale. He was just trained by somebody. Okay, so he wrote an editorial in the Washington Post, which a lot of our listeners obviously saw, and they sent it to us. It's like, true believer 101. He hits every true believer trope, or most of the true believer tropes that there are, meaning the ways of justifying belief in something that's not scientific.

He starts right out with, “I was inclined to skepticism,” right? The faux skepticism. “But I was convinced by the evidence.” But always, in cases like this, we have somebody defending a paranormal belief or whatever. The evidence is crap. And then it's a series of post-hoc excuses and rationalizations for why the evidence is crap, right? That's basically what it amounts to.

This is like a big game of Name That Logical Fallacy, right, reading this guy's article. He essentially said that he was asked to investigate – by the Catholic Church (he is a Catholic) – cases that they suspected might be genuine demonic possessions, to determine whether or not they were mentally ill, or maybe genuinely possessed. And he said the vast majority of them were diagnosable with some kind of mental illness. But there were these few cases where things happened that he couldn't explain.

E: And therefore ...

S: And therefore demons. Right. So he goes from his series of logical fallacies there. First, he makes the argument from personal incredulity, right? “I couldn't explain it! Therefore it's not explained.” Wrong. “Therefore, it's inexplicable, it's unexplainable.” Wrong. “Therefore, it's demons.” Wrong.

(Cara laughs)

S: So the three logical fallacies: Argument from personal incredulity, confusing unexplained with unexplainable, and then the argument from ignorance. “We don't know what this is, therefore it's demons.” Yeah, we call that the true believer trifecta.

E: Yeah, or the – what do they call in the Catholic Church? The trinity! Yes.

(Cara laughs)

S: A trinity in logical fallacies? So here is the anecdotal evidence that he offers. That some of the allegedly possessed people that he investigated displayed hidden knowledge, like telling one of those present, “You have a secret sin.”

C: What?

E: What, now, wait. Way too specific! That is just ...

J: What did he mean, “You have a secret sin”? Like a sin that they're not even aware of?

C: Yeah.

S: No, that they're ...

C: No, a sin that they're not telling anyone.

S: Yeah

C: Like everyone has.

S: Like every human being on the planet. So that's ... he basically describes really basic cold reading techniques just as applied to a fake demonic possession. That's one of those falsely specific comments. It seems specific once you make the connection to you, but it really is sort of a vague thing that could apply to everybody.

All right, he says, here's some other evidence. This is a quote now,

“A possessed individual may suddenly in a type of trance voice statements of astonishing venom, and contempt for religion, while understanding and speaking various foreign languages previously unknown to them. The subject might also exhibit enormous strength or even the extraordinarily rare phenomenon of levitation.”

Then in parentheses:

“I have not witnessed a levitation myself, but half a dozen people I work with vow that they've seen it in the course of their exorcisms.”

B: Oh! That's all I need!

S: There's no video evidence of any of this of course.

E: Joe said his sister's cousin's third brother's cousin's husband's daughter once saw someone maybe levitating.

S: So these are all also completely standard, out of the book, demonic possession tricks, right? So the whole levitation thing is, you know, if people arch their back, especially if you arch your back violently, you could bounce off the bed. And then people call that levitation. There's never video of somebody floating three feet above the bed like in The Exorcist movie.

E: Right. That's why it's good to have a ...

B: Ghost Busters!

E: ... you can test it ...

S: Yeah

E: right away to see if someone's really levitating, just throw the hula hoop around them.

S: Right. And speaking various foreign languages, again, very typical type of claim that's made, but never verified. Never ever verified. So, the other question you have to ask is, “So, what exactly happened? Did they just utter a phrase of gibberish that somebody thought was a foreign language? Or did they say a phrase in a foreign language, but they weren't actually having a conversation in a foreign language?”

You know, you could memorize a phrase of Latin, and throw that out there in the middle of your demonic possession act, you know.

E: (Demonic voice) E Pluribus Unum! Aragh!

(Cara laughs)

S: Exactly. That doesn't mean that they know the language.

C: Yeah

S: Yeah, so those are the kind of things where the story evolves over time, and you get this standard narrative, like the levitation and the speaking in foreign languages. “They said awful, mean, vicious things,” like that's evidence that they're possessed by a demon.

The thing is, I've actually watched dozens and dozens of hours of exorcisms on video tape. I've watched a lot on TV, and a lot that were private, that were made available to me, but they were not something that was aired on TV. They are boring as hell!

C: Yeah

S: Nothing happens.

J: It's not anything like the movies, guys. It's a lot of people just ...

E: Oh gosh!

J: kind of standing there, looking at someone who's acting completely normal.

(Cara laughs)

S: Or, in the cases where you have a mentally ill person who thinks that they're possessed by a demon, they're pretending to be possessed by a demon; and I've joked that I've seen better portrayals at live action role playing games. I mean, it's just so unconvincing! It's pathetic! You have to believe that the interesting stuff always happens off camera, and that whenever a camera's rolling, suddenly, you get these childish portrayals of what somebody thinks a demonic possession might look like based upon watching the movies.

Another thing, there was a 20/20 documentary where they were showing a real life exorcism, and the voice over is just ...

B: I know what you're gonna say!

S: Yeah, the voice over was comical! They were saying, “Oh, she displayed superhuman strength,” because that's part of the narrative. Meanwhile, there's literally two little old ladies holding the person down.

(Cara laughs, Evan chuckles)

S: And not seeming to struggle at all, just ... so it's just the narrative. They're just relaying the narrative despite the fact that the evidence does not support it whatsoever! You know?

B: Steve, I remember that scene. Jay, Dylan could beat those two women up, that's how old and frail they were.

J: My three and a half year old son could beat the two women up that were holding down the superhuman strength of the possessed girl.

S: If this is all happening, why do we never catch it on camera, especially now with everyone with a cell phone?

J: Well, it's the same thing with UFOs, Steve, I mean, there's ...

S: Right!

J: cameras in almost every person's hands today.

S: Here is his answer:

“One cannot force these creatures to undergo lab studies, or submit to scientific manipulation. They will also hardly allow themselves to be easily recorded by video equipment, as skeptics sometimes demand. The official Catholic catechism holds that demons are sentient, and possess their own will. They are (as they are fallen angels), they are also craftier than humans. That's how they sow confusion, and seed doubt after all. Nor does the church wish to compromise a sufferer's privacy, nor do doctors want to compromise a patient's confidentiality.”

So, this is the whole “Aliens are more intelligent than us. Demons are craftier than us. The conspiracy theorists are smarter and have more resources. The Bigfoot ...”

E: Immune to science.

S: “Bigfoot can teleport,” whatever. “Psychic ability doesn't function when there are skeptics in the room.” This is all ...

E: Special pleading.

S: Post hoc, yeah, special pleading. This is all special pleading.

B: Oh, Steve, you remember that quote, Steve? Those Satanists are so good that they left no evidence! So the lack of evidence was evidence that they ...

S: Yeah

B: are super ... whoa! What universe do you live in?

S: The exact quote was ...

J: So scary!

S: “Listen, these people are master Satanists! The lack of evidence proves that they did it!”

(Rogues laugh)

B: Oh my god!

J: Steve, you know what, that actually sounds like it came from The Simpsons.

S: I know! It's a self-parody! That's why. The confidentiality seems reasonable at first, but you know what? We film patients all the time! It's like, “We would like to film you in order to document your movement disorder,” or whatever, “for teaching purposes, for research, for documentation purposes.” You get them to sign a little thing, and you use it properly. You don't air it on the TV, but it's evidence.

C: Well, you would think that if somebody was possessed by a demon, they would be like, “Hell, yeah! Film this! I never want this to happen again! Learn what's going on for me.”

J: Yeah, exactly!

S: And if they're not in their right minds, you get someone else, whoever their health care power of attorney or whatever, their next of kin, to give permission if they're not of sound mind. So that's not really an excuse for why there's no video at all. I have to say one other thing: It's only tangentially related to this actual case. I blogged about this earlier in the week, and guess who showed up in the comment section?

J: Dr. Egnor.

S: Yes, Michael Egnor showed up.

E: Ooh, hey!

B: Oh my god!

S: Always a trip. If you want to read a stunning example of motivated reasoning (Rogues chuckle), read his post. He's completely ignoring all of the actual feedback that people are giving him, and he has his narrative. His narrative is that belief in demons is the same thing as belief in aliens, and that skeptics and scientists believe in aliens, but they don't believe in demons because they're materialists, their materialist bias, right?

So you could have faith in religion and God, and you believe in demons because that fits your world view, or you believe you're a materialist, and that's just your world view, and then you believe in aliens. And he really forces this analogy between atheist religion and his religion to an absurd degree.

So here, I have to give you a quote. He says, “You have original sin (global warming, homophobia, Islamophobia, overpopulation) sacraments,” - and his example of sacraments are, “abortion, gay marriage, and recycling.”

C: What?

(Rogues laugh)

B: Oh, wow.

C: What? One of these things is not like the other ...

S: “Redemption, which is multiculturalism, reducing your carbon footprint. And angels and demons (aliens).” So, (Cara laughs) right. So we recycle as part of our sacrament, apparently. You can see how he's shoehorning – he has his narrative, and the facts are just irrelevant. He'll just shoehorn them in. So I'm trying to explain to him the difference between speculating about something that is plausible, like the fact that there might be life elsewhere in the universe, versus inventing an unfalsifiable supernatural belief system that is not based on anything that we know.

So somebody said, “The analogy would be apt if you were a demon speculating about whether these other hells that you know about also had demons in them. That would be a better analogy to ...

B: Ha ha! Yeah, right.

S: humans speculating about whether these other Earth-like planets might also have life on them.” Anyway, all logic is wasted on him. Seriously, it's like logic has completely left the building. He's got his narrative, and he's there to push it.

(Rogues laugh)

S: So, it's very entertaining though to read through, if you have twenty minutes to kill, and you want to read the comments to my blog, the blog post is “A Psychiatrist Falls For Exorcism.” We're up to a hundred comments on it. It's mostly people having fun with Michael Egnor.

C: By the way, Steve, why did you have to use that picture from The Exorcist that's going to haunt my dreams now? (Laughs)

S: I know, it's creepy!

(Bob laughs)

C: It's so scary!

B: I love it!

C: Ah, that movie scared the shit out of me when I was a kid!

J: Yeah, me too. In my mind, I was so scared of that movie.

C: Linda Blair looks extra gross in this picture.

B: I like it!

S: For its time, yeah, it was a genuinely scary movie, absolutely.

C: Oh yeah.

E: Based on a true exorcism.

S: All right, Cara ...

C: A true one ...

B: Your mother sells socks in Hell!

S: Sews socks that smell!

C: Oh yeah! Doesn't she speak Italian? (Italian accent) Me? Why do this to me?

E: Yeah, yeah, yeah. Mama mia.

S: No, really, that really solidified – it's again, it's one of those interplays between culture and the movies, where the movie's based on culture, but then it solidifies the culture.

E: Yeah

S: So that became the standard demonic possession. The levitating ...

E: Right!

S: everything. Speaking in other languages.

E: Close Encounters did the same for aliens.

C: Yeah

S: Exactly.

fMRI Validity (23:11)

S: All right, Cara, tell us about fMRI scans.

C: Yes! Okay, so we talk a lot on the show about functional magnetic resonance imaging studies (or fMRI studies), and how we often have to take the results with a grain of salt. Usually though, that's because the sample sizes of these kinds of studies are really small, mostly because it's really expensive to do them; and that allows for a whole lot of statistical fluctuation. But, a whole new problem was recently uncovered. And I think at this point we're not really talking a grain of salt, we're kind of talking the whole salt mine.

So to give a little bit of background: Researchers in Sweden and the UK decided that they wanted to test some of the underlying assumptions used in standard fMRI studies. Specifically, these studies rely on software. You do an fMRI, you do the scan, and you don't analyze the data point by point by hand. There's a software layer in between the scanner and you to comb through the overwhelming amount of data that that scanner produces.

And what these researchers found does not look good. If you guys remember, like I said (we've talked about fMRI a lot), the way that it works: You get in a – have any of you four been scanned before? In an fMRI? Not just MRI?

S: No, an MRI, not an fMRI.

C: So I did an fMRI maybe a year ago when I was working on a TV show on Al Jazeera America called TechKnow. And it was really intense! I was kind of scared at first. I remember, Steve, you joking with me that I was gonna freak out in the scanner! I was proud of myself that I did pretty well, but yeah, you basically lay on your back; they put you into this scanner that only really covers your head and your shoulders, but it's very close to you. It feels very restrictive. And it works because it surrounds your head with this magnetic field that's also combined with radio waves. It's very loud, there's all these knocking sounds.

And as opposed to an MRI, which is actually looking at this nuclear flip in your atoms, an fMRI is looking at the hemoglobin in your blood. It reacts differently under this field of magnets and radio waves if your blood is oxygen rich or if it's oxygen poor, enough so that you can see a contrast in the data, so they can roughly calculate which areas of the brain are “active,” - I'm saying that in quotes - “active” as compared to background levels.

S: Right

C: The problem is though that most of the analysis is, like I said, actually done by this software. And there are three packages that are commonly used, and specifically these researchers in Sweden and the UK wanted to look at these software packages. They're called, SPM, FSL, and AFNI. And these programs operate generally on two primary levels. So there are a couple of terms that we have to understand if you're gonna be reading about this at all, you'll keep running into these terms.

They divide the 3D space of the mapped brain into these little units called “voxels.” So a voxel is kind of like a pixel. We're comfortable with pixels in bitmap space. Voxels are like little functional units of the three-dimensional space of the mapped brain.

S: You know how many brain cells would be in one voxel?

C: Oh, I don't! How many?

S: About a million.

C: Holy shit!

S: Gives you an idea of the resolution.

C: And that's just one voxel, wow, yeah.

S: It's like a pixel, it's like a three dimensional pixel, yeah.

C: Yeah! And we think of them as so-o-o high-res, but your brain is so much more high-res! Um, okay, and second, they scan all of those tiny voxels to find areas of clustered activity, and that's when they can start saying that there's like, “function” happening in that area. Obviously, the researchers don't go through all of these voxels by hand. The software package forms these voxels and then scans all of the voxels and says, “Okay, all of these voxels that are very close together are all active; we're gonna call that a cluster.” So you see these two different levels of analysis: The voxel analysis and the cluster analysis.
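The two levels described here, thresholding individual voxels and then grouping neighboring active voxels into clusters, can be sketched with a toy example. This is only an illustration under stated assumptions, not how SPM, FSL, or AFNI implement it: the function name `find_clusters`, the 3x3x3 grid, the statistic values, and the threshold are all hypothetical, and "close together" is assumed to mean the six face-adjacent neighbors.

```python
from collections import deque

def find_clusters(volume, threshold):
    """Group supra-threshold voxels into clusters of face-adjacent neighbors.

    volume: dict mapping (x, y, z) -> statistic value (hypothetical toy data).
    Returns a list of clusters, each a sorted list of voxel coordinates.
    """
    # Voxel level: keep only voxels whose statistic exceeds the threshold.
    active = {v for v, value in volume.items() if value > threshold}
    neighbors = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    clusters = []
    seen = set()
    # Cluster level: breadth-first flood fill from each unvisited active voxel.
    for start in sorted(active):
        if start in seen:
            continue
        queue = deque([start])
        seen.add(start)
        cluster = []
        while queue:
            x, y, z = queue.popleft()
            cluster.append((x, y, z))
            for dx, dy, dz in neighbors:
                n = (x + dx, y + dy, z + dz)
                if n in active and n not in seen:
                    seen.add(n)
                    queue.append(n)
        clusters.append(sorted(cluster))
    return clusters

# Toy 3x3x3 volume: background 0.0, three touching "active" voxels, one isolated.
volume = {(x, y, z): 0.0 for x in range(3) for y in range(3) for z in range(3)}
for voxel in [(0, 0, 0), (0, 0, 1), (0, 1, 1), (2, 2, 2)]:
    volume[voxel] = 3.5

clusters = find_clusters(volume, threshold=2.3)
cluster_sizes = sorted(len(c) for c in clusters)
print(cluster_sizes)
```

A cluster-wise analysis then has to decide how large a cluster must be before it counts as more than chance, which is the step the study discussed in this segment calls into question.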

S: Can I say one other thing?

C: Yeah!

S: There's actually another layer in there that I think is worth pointing out.

C: Oh, okay. Yeah, yeah, for sure.

S: So, what it's doing is it's looking at the activity of each voxel, and statistically comparing that activity to the typical pattern that an active cluster of cells would have. So when a brain region is active, the oxygenation levels will dip because you're using up the oxygen. Then they'll increase as you increase blood flow. That peaks at about six seconds. Then it drops again. It goes a little bit below baseline, and then back to baseline. So it has a very specific shape to it.

And what it's doing is comparing the actual activity of each voxel to the ideal activity that represents an active region. And then it's making a statistical comparison to say how likely is it that that voxel is active?
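The comparison of each voxel's time course to an idealized response shape can be sketched roughly as follows. This is a minimal illustration, not the analysis packages' actual code: it assumes a double-gamma response curve with illustrative, unfitted parameters (which peaks at roughly five seconds and omits the brief initial dip), it uses a plain Pearson correlation as the comparison, and both voxel time courses are made up.

```python
import math

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, written out with the standard library."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (math.gamma(shape) * scale ** shape)

def hrf(t):
    """Illustrative double-gamma response: a main peak minus a smaller, later undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

times = [0.5 * i for i in range(61)]  # 0 to 30 seconds in half-second steps
template = [hrf(t) for t in times]

# Two made-up voxel time courses: one follows the template, one is a slow drift.
responding_voxel = [2.0 * h + 0.01 * math.sin(t) for h, t in zip(template, times)]
drifting_voxel = [0.001 * t for t in times]

peak_time = times[template.index(max(template))]
r_responding = pearson(template, responding_voxel)
r_drifting = pearson(template, drifting_voxel)
print(peak_time, round(r_responding, 3), round(r_drifting, 3))
```

The voxel that tracks the template correlates strongly with it, while the drifting voxel does not; a real analysis would turn that kind of fit into a per-voxel statistic.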

C: Yeah, and that's ...

S: That's another statistical layer that actually is relevant to the study.

C: And that's based, of course, on previously collected data that was supposed to be used as these baselines.

S: Right, exactly.

C: So that each individual patient or subject is not just compared to themselves. They're compared to what is considered quote-unquote, “normal.” And so, yeah. What they decided to do is they took data from previous studies (and this is actually only recently become something that they're capable of doing, and this is something we'll get to a little bit later). But most fMRI data, especially in the past, was not made public. So it's very hard to replicate; it's very hard to dig into the data.

But more recently, I think, there has been kind of an open data movement within science, and so these researchers were able to take the data from previous studies that were made available on databases, and they looked specifically at four hundred ninety-nine different control subjects across studies.

So these were control subjects, meaning they shouldn't have had any organized brain behavior. You put one subject in the scanner, and you tell them to – I don't know – imagine a fluffy puppy dog? And then you put another subject in the scanner and you don't tell them to imagine a fluffy puppy dog. So they're looking at the control data. Anything picked up by the scanner should have been random. Obviously, when you're in there, you can't perfectly make your brain devoid of thought. So, maybe you're thinking about your to do list, maybe your arm twitches, of course you're gonna have activation in your brain when those things happen. But it should be super-random. You shouldn't see any sort of a theme going on within the control groups.

They took these four hundred ninety-nine subjects and arbitrarily broke the group up into individual groups of twenty, and they measured them against each other. Two million eight hundred eighty thousand random comparisons. So they did a lot of analyses here, close to three million analyses.

And what did they find? Well, they expected a false positive rate of five percent. This is based on standard models, this is pretty common in science, five percent false positives. But they found certain packages, the false positive rate was calculated as high as seventy percent. Seventy percent across already published studies.
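The logic of testing null data against itself can be sketched with a small simulation. This is a simplified stand-in, not the study's method: it assumes one simulated number per subject instead of imaging data, two groups of twenty drawn from the same distribution, a pooled two-sample t-test, and a hypothetical trial count and random seed. Because the test is valid for this kind of data, the observed false positive rate should land near the nominal five percent; the finding discussed here is that cluster-wise inference on real data did not.

```python
import math
import random

def two_sample_t(a, b):
    """Pooled-variance two-sample t statistic for two equal-sized groups."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mean_a) ** 2 for x in a) / (n - 1)
    var_b = sum((x - mean_b) ** 2 for x in b) / (n - 1)
    return (mean_a - mean_b) / math.sqrt((var_a + var_b) / n)

# Two-sided 5% critical value of Student's t with 38 degrees of freedom.
T_CRITICAL = 2.024
GROUP_SIZE = 20
N_TRIALS = 4000  # hypothetical number of random comparisons

random.seed(574)
false_positives = 0
for _ in range(N_TRIALS):
    # Both groups come from the same distribution, so any "effect" is a false positive.
    group_a = [random.gauss(0.0, 1.0) for _ in range(GROUP_SIZE)]
    group_b = [random.gauss(0.0, 1.0) for _ in range(GROUP_SIZE)]
    if abs(two_sample_t(group_a, group_b)) > T_CRITICAL:
        false_positives += 1

false_positive_rate = false_positives / N_TRIALS
print(f"observed false positive rate: {false_positive_rate:.3f}")
```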

S: Right

C: When analyzed. They also found larger problems in these clusterized comparisons than in voxel-comparisons. And one other thing that they found is, like, for example, they looked at two hundred forty-one recent fMRI studies, and they realized that some forty percent of those recent fMRI studies didn't correct for doing multiple comparisons. And we've talked about this a lot on the show.

S: Yeah

C: The more comparisons you do, the more chance you're gonna have of a higher error rate, and you have to correct for this. This was illustrated beautifully – I wasn't on the show yet, I wonder if you guys studied this. In 2009, a group out of UC Santa Barbara did an fMRI on a dead fish ...

S: Yeah, the dead salmon study.

C: Yeah. And they found activity, and that's because they did so many different correlations that they were able to get those false positives. They beautifully illustrate why you have to correct for that.
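The arithmetic behind the multiple comparisons problem is short enough to write out. This sketch assumes the tests are independent, which real neighboring voxels are not, and it uses the Bonferroni correction only as the simplest example of a correction; the function names are hypothetical and nothing here is taken from the studies discussed.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across n independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests

def bonferroni_threshold(alpha, n_tests):
    """Per-test threshold that keeps the family-wise rate at or below alpha."""
    return alpha / n_tests

alpha = 0.05
for n_tests in (1, 10, 100, 1000):
    uncorrected = familywise_error_rate(alpha, n_tests)
    corrected = familywise_error_rate(bonferroni_threshold(alpha, n_tests), n_tests)
    print(f"{n_tests:>5} tests: uncorrected {uncorrected:.3f}, Bonferroni {corrected:.3f}")
```

With a five percent threshold per test, the chance of at least one spurious hit climbs toward certainty as the number of tests grows, which is why an uncorrected scan of many voxels can "find" activity in anything.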

S: Yeah, but to be clear, they deliberately did it wrong ...

C: Yeah

S: and showed that you can manufacture a false positive.

C: Exactly.

S: When you do it correctly, it doesn't. And this is true of this study as well. Just to be clear, this is not an indictment of the technology of fMRI scans. This is an indictment of – it just shows that it can be abused or misused, or if you don't use proper rigorous methodology. And even the numbers that you're giving - I don't know if you're gonna get into this layer of detail – like, the seventy percent, these are all, like, you pick your parameters, you pick what statistical threshold to use, and how many subjects et cetera.

So if you use more rigorous methods, that false positive rate goes down. And also, you kind of, I think you touched upon this, but I do want to emphasize that they looked at two basic ways of analyzing the data, the voxel-wise inference ...

C: Yeah

S: and the cluster-wise inference. For the voxel-wise inference, it was fine.

C: Yeah

S: The actual ...

C: They found that it was pretty standard across the board.

S: And for cluster-wise, that's where the problem was. And it was very variable depending exactly on how they looked at the data. The seventy percent's kind of a representative figure, but it's like, the results were sort of all over the place depending on exactly what process you're using. How many subjects, what level of statistical significance you're choosing, et cetera.

But it does really though call into question the cluster-wise inference as a method, at least within the statistical packages.

C: For sure, because again, what they didn't do is they didn't go back and redo previously published studies. What they did is they took data ...

S: Yes

C: from previously published studies and did new analyses on it, and showed that if you do these analyses in a not-terribly rigorous way, and if you kind of just let the software make the decisions for you, and you don't go back in make the kinds of corrections that are necessary when you're doing fMRI research, you can come up with these incredibly inflated false positives.

S: Right

C: These inflated levels of significance. And there was another wrinkle, and this actually became the headline of a lot of the stories. To me, it was slightly less interesting, but also kind of worrisome, is that there was a bug in one of the packages. In the AFNI package, there is a software program called “3dClustSim,” and that bug was sitting in that package for fifteen years. It was only corrected in May of 2015 while the manuscript was being prepared. And that reduced the size of the image searched for clusters. So it underestimated correction, and it ended up again inflating significance.

So obviously, you can read the paper. Hey, what journal is the paper in? (Steve chuckles) Anyone want to guess?

S: P-NAS?

C: Yeah!

(Rogues laugh)

C: Always in P-NAS. So, you know, there's a lot of nuanced analyses. They get really into the statistics. You can read it for more details, but by the end of it, basically, in the discussion, the authors estimated that some forty-thousand studies published using fMRI data over the course of these ... when were fMRIs first introduced?

S: About twenty years.

C: In the nineties.

S: About twenty years.

C: Yeah, okay, so these twenty years could be either invalid, or could have some questions about their validity. And the worry is basically, we can't redo them all. A) A lot of the data's not publicly available, and (b), who's gonna do that? We've already seen that we're dealing with some replication issues in science, and this is I think a prime example of why it is so important that before you say, “Scientists know where your arm is gonna move before you even think about it.”

S: Or they can tell when you're lying, yeah.

C: Yeah, or they know ... exactly. All the different things that we read, it's a lot of pop psychology. We read out of these fMRI studies. “fMRI studies show that scientists know this about you, and that about you.” A lot of times we really do have to take that with a grain of salt, because (a), the sample sizes are too small, (b), they're often not replicated, and now we know (c), if we're not using the software correctly, and if the software hasn't been reinforced and validated by multiple different data analyses, we could be dealing with hugely inflated false positives.

S: Yeah

C: So we could be dealing with seeing activity that's not actually there.

S: Which doesn't surprise me at all, 'cause over the years, you see these really crazy fMRI studies. I mean, some are, they seem very rigorous, and very good. Others are like, “Really?”

C: Yeah

S: “That looks like total crap to me.” And this supports that view that it's very easy to generate false positives. This is just basically showing statistically rigorously what we kind of all knew from looking at a lot of these published studies. This reminds me a lot of the Simonsohn article, the p-hacking article ...

C: Yeah

S: where they say, “If you exploit these researcher degrees of freedom, you could generate false positive results sixty percent of the time to a five percent level.” This is very similar. Very similar numbers in fact, as well. So this is the fMRI version of p-hacking.

C: Yeah

S: We definitely need to know about this. This is excellent self-corrective method within science. And the authors said, “Yeah, we can't really go back and redo forty thousand studies, but going forward, this is really important to note.”

E: Yeah

C: Yeah, I'm hoping that this paper gets cited in the method section of almost every fMRI study moving forward. Like, “Based on research by these Swedish and UK scientists, we realize that we needed to go through and ensure that our methods were valid!” Or have another layer of statistical rigor.

(Commercial at 36:25)

Juno at Jupiter (37:38)

First Driverless Car Fatality (45:18)

Who's That Noisy (51:39)

- Answer to last week: Rossby whistle in the Caribbean Sea

What's the Word (54:56)

- Paroxysmal

S: Well, Cara, it is time for What's the Word.

C: Ooh! What's the Word? The word this week is a fun one, and I know you like it, Steve.

J: Right, yes, great segue. I love this segment. So when we talk in each others' segments, it's even funner. You what this word? (Cara laughs) The word of the week, Steve, is delicious apple. (Apple crunching sound) (Jay moans while chewing)

C: Back to the core!

E: Is it red delicious?

C: The word this week is a really fun one. I know, Steve, that you love this word. I read this word all the time. And the most recent time that I came across it was just two nights ago, when I was reading the next chapter in Oliver Sacks's Hallucinations, which I'm finally getting around to read. And so the word is paroxysmal. Paroxysmal.

S: Or parox-ysmal.

C: So it's spelled P-A-R-O-X-Y-S-M-A-L, and it's actually an adjective from the noun, paroxysm. So, in medicine a paroxysm is a sudden fit, or an attack, or an increase of disease symptoms, like convulsions, coughing, maybe pain. And they often recur. In a literary sense, we may hear it being used to describe emotion, like a paroxysm of emotion would be a violent outburst.

I read this all the time, 'cause I read a lot of clinical and medical non-fiction. Steve, how often do you use this word in practice?

S: All the time.

C: All the time.

S: Yeah, (Laughs) I love it! And it's such a mouthful (laughs). It's such a non rolling off the tongue word, but it's one of those handful of words we talk about on the show, where I read it all the time, and I've never really needed to say it out loud until now. And that's when it always hits you. Like, how the freak do you pronounce this? I love it though.

It comes from the Greek paroxynein, or parox-y-nein, meaning to stimulate or exasperate. It joins two root words: para, meaning beyond; and the Greek oxynein, which means to sharpen. That comes from the root word oxys, meaning sharp. That's also the root in the word oxygen. Its first documented usage in English was from the fifteenth century. But prior to that, it came through French, and Medieval Latin before that, dating back to the thirteenth century. It always has had the medical meaning, and only within the last century or two did we start to see it used in a more literary sense.

S: That's one of those words that's just part of my vocabulary. Like, I would lose total sense of whether or not people understood what that word means.

C: Yeah

S: 'Cause we use that all the time, because, you know, the time course of symptoms in illness is critical to the diagnosis. And so we have lots of terms that we use to describe how things change over time, like relapsing, remitting, et cetera. Paroxysmal is just one of those words that we use. Yeah, it's cool.

C: Yeah

Your Questions and E-mails

Question #1: Jet Fuel (57:47)

Science or Fiction (1:01:04)

(Science or Fiction music)

It's time for Science or Fiction

Skeptical Quote of the Week (1:20:00)

S: And until next week, this is your Skeptic's Guide to the Universe.

S: The Skeptics' Guide to the Universe is produced by SGU Productions, dedicated to promoting science and critical thinking. For more information on this and other episodes, please visit our website at theskepticsguide.org, where you will find the show notes as well as links to our blogs, videos, online forum, and other content. You can send us feedback or questions to info@theskepticsguide.org. Also, please consider supporting the SGU by visiting the store page on our website, where you will find merchandise, premium content, and subscription information. Our listeners are what make SGU possible.
