SGU Episode 898

| This episode was transcribed by the Google Web Speech API Demonstration (or another automatic method) and therefore will require careful proof-reading. |


| SGU Episode 898 |

|---|

| September 24th 2022 |

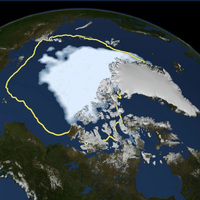

2012 Arctic sea ice minimum. Outline shows average minimum 1979-2010.[1] |

| Skeptical Rogues |

| S: Steven Novella |

B: Bob Novella |

C: Cara Santa Maria |

J: Jay Novella |

E: Evan Bernstein |

| Guest |

DA: David Almeda (sp?), SGU Patron |

| Quote of the Week |

In the field of thinking, the whole history of science – from geocentrism to the Copernican revolution, from the false absolutes of Aristotle's physics to the relativity of Galileo's principle of inertia and to Einstein's theory of relativity – shows that it has taken centuries to liberate us from the systematic errors, from the illusions caused by the immediate point of view as opposed to "decentered" systematic thinking. |

– Jean Piaget, Swiss psychologist |

| Links |

| Download Podcast |

| Show Notes |

| Forum Discussion |

Introduction, Guest Rogue

Voice-over: You're listening to the Skeptics' Guide to the Universe, your escape to reality.

[00:12.880 --> 00:17.560] Today is Tuesday, September 20th, 2022, and this is your host, Steven Novella.

[00:17.560 --> 00:18.960] Joining me this week are Bob Novella.

[00:18.960 --> 00:19.960] Hey, everybody.

[00:19.960 --> 00:20.960] Cara Santa Maria.

[00:20.960 --> 00:21.960] Howdy.

[00:21.960 --> 00:22.960] Jay Novella.

[00:22.960 --> 00:23.960] Hey, guys.

[00:23.960 --> 00:24.960] Evan Bernstein.

[00:24.960 --> 00:26.760] Good evening, everyone.

[00:26.760 --> 00:30.400] And we have a guest rogue this week, David Almeida.

[00:30.400 --> 00:32.120] David, welcome to the Skeptics' Guide.

[00:32.120 --> 00:33.120] Hi, guys.

[00:33.120 --> 00:34.120] Thank you for having me.

[00:34.120 --> 00:40.440] So, David, you are a patron of the SGU, and you've been a loyal supporter for a while,

[00:40.440 --> 00:45.200] so we invited you on the show to join us and have some fun.

[00:45.200 --> 00:46.200] Tell us what you do.

[00:46.200 --> 00:47.840] Give us a little bit about your background.

[00:47.840 --> 00:48.840] I'm an electrician.

[00:48.840 --> 00:52.640] That doesn't sound very exciting relative to what you guys are all doing.

[00:52.640 --> 00:55.080] No, electricians are cool, man.

[00:55.080 --> 00:56.080] Yeah, they're shocking.

[00:56.080 --> 00:58.120] Oh, it starts.

[00:58.120 --> 01:05.640] I actually heard about your show kind of because of work, because when I was starting out as

[01:05.640 --> 01:09.920] an apprentice, pretty much all the work I did was really boring and repetitive, and

[01:09.920 --> 01:13.520] so I was kind of losing my mind a little bit.

[01:13.520 --> 01:17.080] And a friend of mine was at my house helping me work on the house, and he was playing your

[01:17.080 --> 01:20.400] guys' podcast, and I had no idea what a podcast even was.

[01:20.400 --> 01:22.200] This was like 2012, I think.

[01:22.200 --> 01:24.400] I thought he was like listening to NPR or something.

[01:24.400 --> 01:26.320] You were late to the game.

[01:26.320 --> 01:27.320] Yeah, I know.

[01:27.320 --> 01:28.320] I know.

[01:28.320 --> 01:32.320] And so anyways, he was listening to your show, and I asked him what it was, and he told me,

[01:32.320 --> 01:35.400] and then I ended up going back and listening to your whole back catalog while I was doing

[01:35.400 --> 01:41.320] horrible, very repetitive work, and it got me through that for the first couple years

[01:41.320 --> 01:42.320] of my apprenticeship.

[01:42.320 --> 01:43.320] Yeah, we hear that a lot.

[01:43.320 --> 01:49.520] It's good for when you're exercising, doing mind-numbing repetitive tasks, riding a bike

[01:49.520 --> 01:50.520] or whatever.

[01:50.520 --> 01:51.520] It's good.

[01:51.520 --> 01:53.840] You're not just going to sit there staring off into space listening to the SGU.

[01:53.840 --> 01:58.520] I guess some people might do that, but it's always good for when you're doing something

[01:58.520 --> 01:59.520] else.

[01:59.520 --> 02:00.520] Well, great.

[02:00.520 --> 02:01.520] Thanks for joining us on the show.

[02:01.520 --> 02:02.520] It should be a lot of fun.

News Items


2022 Ig Nobels (2:08)

[02:02.520 --> 02:05.640] You're going to have a news item to talk about a little bit later, but first we're just going

[02:05.640 --> 02:07.960] to dive right into some news items.

[02:07.960 --> 02:12.760] Jay, you're going to start us off by talking about this year's Ig Nobel Prizes.

[02:12.760 --> 02:14.160] Yeah, this was interesting.

[02:14.160 --> 02:22.840] This year, I didn't find anything about people getting razzed so much as I found stuff that

[02:22.840 --> 02:26.520] was basically legitimate, just weird, if you know what I mean.

[02:26.520 --> 02:28.200] Yeah, but that's kind of the Ig Nobels.

[02:28.200 --> 02:29.200] They're legit, but weird.

[02:29.200 --> 02:32.880] They're not fake science or bad science.

[02:32.880 --> 02:37.880] The Ig Nobel Prize is an honor about achievements that first make people laugh and then make

[02:37.880 --> 02:39.720] them think.

[02:39.720 --> 02:44.720] That's kind of their tagline, and it was started in 1991.

[02:44.720 --> 02:47.600] Here's a list of the 2022 winners.

[02:47.600 --> 02:48.600] Check this one out.

[02:48.600 --> 02:53.520] The first one here, the Art History Prize, they call it a multidisciplinary approach

[02:53.520 --> 02:57.600] to ritual enema scenes on ancient Maya pottery.

[02:57.600 --> 03:01.960] Whoa, I want to see those.

[03:01.960 --> 03:04.560] Talk about an insane premise.

[03:04.560 --> 03:11.680] Back in the 600 to 900 CE timeframe, the Mayans depicted people getting enemas on their pottery,

[03:11.680 --> 03:13.640] which that's crazy.

[03:13.640 --> 03:18.520] This is because they administered enemas back then for medicinal purposes.

[03:18.520 --> 03:20.200] So it was part of their culture.

[03:20.200 --> 03:24.200] And the researchers think it's likely that the Mayans also gave enemas that had drugs

[03:24.200 --> 03:28.000] in them to make people get high during rituals, which is-

[03:28.000 --> 03:29.000] That works, by the way.

[03:29.000 --> 03:30.000] Yeah.

[03:30.000 --> 03:33.000] So one of the lead researchers tried it.

[03:33.000 --> 03:39.960] He gave himself an alcohol enema situation, and they were giving him a breathalyzer, and

[03:39.960 --> 03:43.340] lo and behold, he absorbed alcohol through his rectum.

[03:43.340 --> 03:46.120] He didn't need to do that to know that that happens.

[03:46.120 --> 03:47.120] This is science.

[03:47.120 --> 03:48.120] We already know that that happens.

[03:48.120 --> 03:49.120] What's the problem, Cara?

[03:49.120 --> 03:50.120] Science?

[03:50.120 --> 03:51.120] Hello?

[03:51.120 --> 03:52.120] Lit review.

[03:52.120 --> 03:53.120] Lit review.

[03:53.120 --> 04:01.160] The guy also tested DMT, but apparently the dose was probably not high enough for him

[04:01.160 --> 04:03.280] to feel anything because he didn't feel anything.

[04:03.280 --> 04:04.840] So I think that's pretty interesting.

[04:04.840 --> 04:09.760] I mean, that's very, very provocative, just to think that the Mayans, I didn't know they

[04:09.760 --> 04:11.160] did that, and then it was like a thing.

[04:11.160 --> 04:13.480] I was like, whatever.

[04:13.480 --> 04:16.080] Next one, Applied Cardiology Prize.

[04:16.080 --> 04:18.940] This one I find to be really cool.

[04:18.940 --> 04:24.700] So the researchers, they were seeking and finding evidence that when new romantic partners

[04:24.700 --> 04:29.880] meet for the first time and feel attracted to each other, their heart rates synchronize.

[04:29.880 --> 04:31.840] That was the premise of their research.

[04:31.840 --> 04:35.640] So the researchers wanted to find out if there is something physiological behind the gut

[04:35.640 --> 04:42.240] feeling that people can and do feel when they have met the quote unquote right person.

[04:42.240 --> 04:44.560] I don't know about you guys, but I've felt this.

[04:44.560 --> 04:49.440] I felt an inexplicable physiological thing.

[04:49.440 --> 04:53.440] I just didn't realize that something profound was happening.

[04:53.440 --> 04:55.960] So let me give you a quick idea of what they did.

[04:55.960 --> 04:58.080] They had 140 test subjects.

[04:58.080 --> 05:03.060] They monitored test subjects as they met other test subjects one on one.

[05:03.060 --> 05:09.280] If the pair got a gut feeling about the other person, the researchers predicted that they

[05:09.280 --> 05:15.660] would have motor movements, you know, act certain types of activity with their eyes,

[05:15.660 --> 05:18.440] heart rate, skin conductance.

[05:18.440 --> 05:22.020] These types of things would synchronize or they would pair each other or mirror each

[05:22.020 --> 05:23.020] other.

[05:23.020 --> 05:27.820] So 17 percent of the test subjects had what they considered to be successful pairing with

[05:27.820 --> 05:28.820] another subject.

[05:28.820 --> 05:33.760] And they found that couples' heart rates and skin conductance correlated to a mutual attraction

[05:33.760 --> 05:35.060] with each other.

[05:35.060 --> 05:41.680] So there is some type of thing happening physiologically when two people, you know, get that feeling

[05:41.680 --> 05:44.520] when they're, you know, there's an initial attraction.

[05:44.520 --> 05:50.040] And it doesn't surprise me because, you know, as mammals, attraction is, you know, it's

[05:50.040 --> 05:51.480] a huge thing.

[05:51.480 --> 05:55.800] It's really it's not only important, but it, you know, your body is reacting to it.

[05:55.800 --> 06:00.180] There are things that happen when you feel that your body is changing in a way.

[06:00.180 --> 06:01.180] Very cool.

[06:01.180 --> 06:04.960] The next one, I think a lot of people will get a kick out of. It's the literature

[06:04.960 --> 06:11.400] prize and they are analyzing what makes legal documents unnecessarily difficult to understand.

[06:11.400 --> 06:14.280] The basic idea here was there's two camps.

[06:14.280 --> 06:21.040] There's a camp that thinks that legal documents need to be as complicated as they are because

[06:21.040 --> 06:26.180] there's technical concepts and they need to be precise and they use this type

[06:26.180 --> 06:28.720] of language to help get that precision.

[06:28.720 --> 06:33.880] And there are other experts that think that laws are actually built upon, you know, mundane

[06:33.880 --> 06:38.360] concepts like cause, consent, and having best interests.

[06:38.360 --> 06:42.100] And what the researchers wanted to do was they wanted to test the two positions against

[06:42.100 --> 06:43.100] each other.

[06:43.100 --> 06:47.600] And essentially what they found, which is not going to surprise anyone, that

[06:47.600 --> 06:53.840] legal documents are at their core, essentially difficult to understand, which is what they

[06:53.840 --> 06:55.320] basically started with that premise.

[06:55.320 --> 07:00.640] But they proved it and they found out exactly what parts of the actual legal documents

[07:00.640 --> 07:02.080] were difficult to understand.

[07:02.080 --> 07:07.380] And they called it something called center embedding, which is when lawyers use legal

[07:07.380 --> 07:11.920] jargon within what they call convoluted syntax.

[07:11.920 --> 07:16.420] So in essence, I think what they're saying here is that legal documents are difficult

[07:16.420 --> 07:21.800] essentially by design, that it's deliberate, which I find interesting and frustrating.

[07:21.800 --> 07:26.680] If you've ever had to read any type of legal documentation, it's kind of annoying how difficult

[07:26.680 --> 07:27.680] it is.

[07:27.680 --> 07:30.860] You have to reread it over and over and over again and look things up and really like sink

[07:30.860 --> 07:32.000] into it to understand.

[07:32.000 --> 07:34.320] So I understand why they did the research.

[07:34.320 --> 07:37.500] I just don't see what the benefit is to the result of their research.

[07:37.500 --> 07:38.720] Maybe they have to iterate it.

[07:38.720 --> 07:41.920] The center embedding thing is actually pretty interesting.

[07:41.920 --> 07:48.120] I saw an example of it and it's like not like it's not what I thought it was going to be.

[07:48.120 --> 07:52.760] It gives an example of like a sentence like a man loves a woman and then a man that a

[07:52.760 --> 07:57.880] woman that a child knows loves a man that a woman that a child that a bird saw is giving

[07:57.880 --> 08:03.840] these examples of like how they do the legal jargon with all those like with all those

[08:03.840 --> 08:08.520] little like phrases within phrases.

[08:08.520 --> 08:12.120] And it's like grammatically correct, but it's like impossible to follow once you have like

[08:12.120 --> 08:13.440] more than one of those.

[08:13.440 --> 08:16.480] The longest one they had here was "a man that a woman that a child that a bird that I heard

[08:16.480 --> 08:18.680] saw knows loves."

[08:18.680 --> 08:23.720] I don't know how that actually makes grammatical sense, but apparently it does.

[08:23.720 --> 08:26.960] It makes no sense to me even though I read it like five times in my head.

[08:26.960 --> 08:29.000] I don't know what that sentence means.

[08:29.000 --> 08:33.500] Have you guys seen... I just started watching that show Maid.

[08:33.500 --> 08:35.000] Have you guys seen that show on Netflix?

[08:35.000 --> 08:36.000] It's so good.

[08:36.000 --> 08:37.840] Oh, highly recommend.

[08:37.840 --> 08:41.660] But they do this funny thing where like she has to go to a court hearing and they kind

[08:41.660 --> 08:45.600] of show what she hears instead of what is being said.

[08:45.600 --> 08:51.680] And so she's at this custody hearing and the judge is talking to one of the prosecutors

[08:51.680 --> 08:56.620] and like mid-sentence they start going, and then legal, legal, legal, legal, so that you

[08:56.620 --> 08:57.720] can legal, legal.

[08:57.720 --> 08:59.520] I'll legal, legal after you legal.

[08:59.520 --> 09:02.520] And she like looked so confused because it's all she hears.

[09:02.520 --> 09:06.680] And they do it on the forms too when she looks at the forms like the words move and they

[09:06.680 --> 09:08.960] start to just say like legal, legal, legal, legal.

[09:08.960 --> 09:13.360] It's a great representation of exactly what you're talking about.

[09:13.360 --> 09:14.360] Totally.

[09:14.360 --> 09:17.360] The Charlie Brown teacher.

[09:17.360 --> 09:18.360] Charlie Brown.

[09:18.360 --> 09:19.360] Yeah.

[09:19.360 --> 09:20.360] Totally.

[09:20.360 --> 09:22.080] Let me get through a few more really quick.

[09:22.080 --> 09:26.720] There was a biology prize where they studied whether and how constipation affects the mating

[09:26.720 --> 09:28.360] prospects of scorpions.

[09:28.360 --> 09:36.540] There was a medical prize for showing that when patients undergo some form of toxic chemotherapy,

[09:36.540 --> 09:41.720] they suffer fewer harmful side effects when ice cream replaces ice chips.

[09:41.720 --> 09:42.720] Okay.

[09:42.720 --> 09:43.720] Reasonable to me.

[09:43.720 --> 09:44.720] Reasonable.

[09:44.720 --> 09:45.720] And actionable.

[09:45.720 --> 09:46.720] Yep, it is.

[09:46.720 --> 09:47.720] And it's legit.

[09:47.720 --> 09:48.720] It is actually legit.

[09:48.720 --> 09:53.080] The ice cream, giving chemo patients ice cream helped them a lot more deal with side effects

[09:53.080 --> 09:55.280] than ice chips.

[09:55.280 --> 09:59.300] The engineering prize, they're trying to discover the most efficient way for people

[09:59.300 --> 10:04.400] to use their fingers when turning a knob, doorknob.

[10:04.400 --> 10:09.920] That's you know, so they study people turning doorknobs and figured it out.

[10:09.920 --> 10:14.040] Physics prize for trying to understand how ducklings manage to swim in formation.

[10:14.040 --> 10:20.520] Now this is cool because we know that fish and birds have very few rules of interaction

[10:20.520 --> 10:26.640] in order to do profound feats of being able to stay in these giant groups.

[10:26.640 --> 10:27.640] They could swim near each other.

[10:27.640 --> 10:31.600] They can fly near each other and they don't really need to have a complicated algorithm

[10:31.600 --> 10:32.600] happening.

[10:32.600 --> 10:34.560] They just have to follow a few simple rules and it works.

[10:34.560 --> 10:36.240] And apparently ducks can do it too.

[10:36.240 --> 10:40.720] There was a peace prize and this one is for developing an algorithm to help gossipers

[10:40.720 --> 10:43.800] decide when to tell the truth and when to lie.

[10:43.800 --> 10:44.800] Very important.

[10:44.800 --> 10:45.800] Right?

[10:45.800 --> 10:47.200] That's just wacky as hell.

[10:47.200 --> 10:52.740] The economics prize for explaining mathematically why success most often goes not to the most

[10:52.740 --> 10:55.460] talented people but instead to the luckiest.

[10:55.460 --> 10:56.460] That one was interesting.

[10:56.460 --> 10:57.840] I recommend you read that.

[10:57.840 --> 11:04.400] And then this last one here is safety engineering prize for developing a moose crash test dummy.

[11:04.400 --> 11:05.400] That's smart actually.

[11:05.400 --> 11:06.400] Yeah.

[11:06.400 --> 11:09.480] So you know lots of people hit these animals with their cars.

[11:09.480 --> 11:12.800] I heard you hesitate because you didn't know if you're supposed to say moose or mooses.

[11:12.800 --> 11:13.800] Meeses.

[11:13.800 --> 11:14.800] I don't know.

[11:14.800 --> 11:15.800] You were like hit these animals.

[11:15.800 --> 11:16.800] Animals.

[11:16.800 --> 11:17.800] Yeah.

[11:17.800 --> 11:18.800] I'm not going to.

[11:18.800 --> 11:19.800] What the heck?

[11:19.800 --> 11:20.800] What's the plural of moose?

[11:20.800 --> 11:21.800] Isn't it moose?

[11:21.800 --> 11:22.800] Yeah.

[11:22.800 --> 11:25.720] I think the plural of moose is moose.

[11:25.720 --> 11:27.640] I just add a K in when I don't know what to do.

[11:27.640 --> 11:29.520] Have I told you the story about the mongoose?

[11:29.520 --> 11:30.520] No.

[11:30.520 --> 11:31.520] What?

[11:31.520 --> 11:32.520] I heard.

[11:32.520 --> 11:33.520] I learned this.

[11:33.520 --> 11:43.760] I heard this in my film class where a director needed two mongoose for a scene and he couldn't

[11:43.760 --> 11:46.640] figure out what the plural of mongoose was.

[11:46.640 --> 11:49.780] You know, the mongooses, mongeese, whatever, he couldn't figure it out.

[11:49.780 --> 11:56.120] So he wrote in his message to the person who had to do this, I need you to get me one mongoose

[11:56.120 --> 11:59.600] and while you're at it get me another one.

[11:59.600 --> 12:04.160] Yeah, technically solves that problem.

[12:04.160 --> 12:05.160] All right.

[12:05.160 --> 12:06.160] Thanks Jay.

It's OK to Ask for Help (12:06)

[12:06.160 --> 12:08.520] Cara, is it okay to ask for help when you need it?

[12:08.520 --> 12:09.920] Is it okay?

[12:09.920 --> 12:13.400] Not only is it okay, it's great.

[12:13.400 --> 12:19.480] Let's dive into a cool study that was recently published in Psychological Science.

[12:19.480 --> 12:23.680] This study has a lot of moving parts so I'm not going to get into all of them but I have

[12:23.680 --> 12:29.520] to say just kind of at the top that I'm loving the thoroughness and I'm loving the clarity

[12:29.520 --> 12:32.320] of the writing of this research article.

[12:32.320 --> 12:37.040] I feel like it's a great example of good psychological science.

[12:37.040 --> 12:39.440] It's based on a really deep literature review.

[12:39.440 --> 12:44.120] A lot of people that we know and love like Dunning are cited who have co-written with

[12:44.120 --> 12:45.480] some of the authors.

[12:45.480 --> 12:56.720] This study basically is asking the question, why do people struggle to ask for help?

[12:56.720 --> 13:01.960] When people do ask for help, what is the outcome usually?

[13:01.960 --> 13:08.800] They did six, I think it was six or was it eight, I think it was six different individual

[13:08.800 --> 13:12.000] experiments within this larger study.

[13:12.000 --> 13:18.000] Their total and the number of people overall that were involved, that participated in the

[13:18.000 --> 13:21.780] study was like over 2,000.

[13:21.780 --> 13:25.180] They kind of looked at it from multiple perspectives.

[13:25.180 --> 13:30.340] They said, first we're going to ask people to imagine a scenario and tell us what they

[13:30.340 --> 13:34.640] think they would do or what they think the other person would feel or think.

[13:34.640 --> 13:39.200] Then we're going to ask them about experiences that they've actually had, like think back

[13:39.200 --> 13:43.220] to a time when you asked for help or when somebody asked you for help and then answer

[13:43.220 --> 13:44.400] all these questions.

[13:44.400 --> 13:50.280] Then they actually did a more real world kind of ecological study where they said, okay,

[13:50.280 --> 13:52.920] we're going to put a scenario in place.

[13:52.920 --> 13:59.120] Basically this scenario was in a public park, they asked people to basically go up to somebody

[13:59.120 --> 14:02.700] else and be like, hey, do you mind taking a picture for me?

[14:02.700 --> 14:10.200] They did a bunch of different really clean study designs where they took a portion of

[14:10.200 --> 14:14.280] the people and had them be the askers and a portion of the people and have them be the

[14:14.280 --> 14:17.920] non-askers and then a portion of the people and have them ask with a prompt, without a

[14:17.920 --> 14:18.920] prompt.

[14:18.920 --> 14:22.920] The study designs are pretty clean but they're kind of complex.

[14:22.920 --> 14:26.760] What do you guys think, I mean obviously you can't answer based on every single study,

[14:26.760 --> 14:31.360] but the main sort of takeaway of this was?

[14:31.360 --> 14:34.040] Asking for help is good and people are willing to give the help.

[14:34.040 --> 14:35.040] People like getting help.

[14:35.040 --> 14:36.040] Right.

[14:36.040 --> 14:37.040] Yeah.

[14:37.040 --> 14:40.440] So not only do people more often than not, and not even more often than not, like almost

[14:40.440 --> 14:46.200] all the time, especially in these low hanging fruit scenarios, do the thing that's asked

[14:46.200 --> 14:50.200] of them, but they actually feel good about it after.

[14:50.200 --> 14:54.240] They feel better having given help.

[14:54.240 --> 14:58.980] And so what they wanted to look at were some of these kind of cognitive biases basically.

[14:58.980 --> 15:04.560] They asked themselves, why are people so hesitant to ask for help?

[15:04.560 --> 15:13.120] And they believe it's because people miscalibrate their expectations about other people's prosociality,

[15:13.120 --> 15:17.640] that there's sort of a Western ideal that says people are only looking out for their

[15:17.640 --> 15:21.600] own interest and they'd rather not help anybody and only help themselves.

[15:21.600 --> 15:28.200] They also talk about something called compliance motivation.

[15:28.200 --> 15:34.160] So they think that people are, when they actually do help you out, it's less because they want

[15:34.160 --> 15:36.400] to because they're prosocial.

[15:36.400 --> 15:41.100] And it's more literally because they feel like they have to, like they feel a pull to

[15:41.100 --> 15:43.560] comply with a request.

[15:43.560 --> 15:49.000] But it turns out that in these different studies where either they're looking at a real world

[15:49.000 --> 15:54.920] example, they're asking people for imagined examples, the helpers more often than not

[15:54.920 --> 15:57.360] want to help and say that they feel good about helping.

[15:57.360 --> 16:01.760] But the people who need the help more often than not judge the helpers to not want to

[16:01.760 --> 16:06.120] help them and worry that the helpers won't want to help them.

[16:06.120 --> 16:11.200] So this is another example of kind of, do you guys remember last week, I think it was,

[16:11.200 --> 16:15.400] when I talked about a study where people were trying to calibrate how much they should talk

[16:15.400 --> 16:16.400] to be likable?

[16:16.400 --> 16:17.400] Yeah.

[16:17.400 --> 16:18.400] Yeah, so yeah.

[16:18.400 --> 16:20.120] And they were, again, miscalibrating.

[16:20.120 --> 16:22.000] They were saying, I shouldn't talk that much.

[16:22.000 --> 16:23.240] They'll like me more if I talk less.

[16:23.240 --> 16:26.360] But it turns out if you talk more, people actually like you more.

[16:26.360 --> 16:31.480] And so it's another one of those examples of a cognitive bias getting in the way of

[16:31.480 --> 16:37.080] us engaging in social behavior and actually kind of shooting ourselves in the foot because

[16:37.080 --> 16:42.580] we fear an outcome that is basically the opposite of the outcome that we'll get.

[16:42.580 --> 16:46.800] If we ask for help, we'll more than likely get it, and more than likely, the person who

[16:46.800 --> 16:50.280] helped us will feel good about having helped us, and it's really a win-win.

[16:50.280 --> 16:52.040] Of course, they caveated at the end.

[16:52.040 --> 16:53.480] We're talking about low-hanging fruit.

[16:53.480 --> 16:58.120] We're not talking about massive power differentials, you know, where one person, where there's

[16:58.120 --> 17:00.000] coercion and things like that.

[17:00.000 --> 17:05.040] But putting some of those caveats to the side, basically an outcome of every single design

[17:05.040 --> 17:07.600] that they did in the study was people want to help.

[17:07.600 --> 17:09.660] And they want to help because it makes them feel good.

[17:09.660 --> 17:13.240] So maybe the next time you need help, if you ask, you shall receive.

[17:13.240 --> 17:15.400] Yeah, also, it works both ways, too.

[17:15.400 --> 17:20.520] People often don't offer help because they are afraid that they don't understand the

[17:20.520 --> 17:26.560] situation and they're basically afraid of committing a social faux pas.

[17:26.560 --> 17:32.560] And so they end up not offering help, even in a situation when they probably should,

[17:32.560 --> 17:33.560] you know, because the fear...

[17:33.560 --> 17:34.560] Like a good Samaritan?

[17:34.560 --> 17:38.840] Well, because people want to help and they want to offer to help, but they're more afraid

[17:38.840 --> 17:41.400] of doing something socially awkward, and so they don't.

[17:41.400 --> 17:47.040] So if you just don't worry about that and just offer to help, have a much lower threshold

[17:47.040 --> 17:49.120] for offering, it's like it's no big deal.

[17:49.120 --> 17:53.400] If it's like, oh, I'm fine, okay, just checking, you know, but people will not do it.

[17:53.400 --> 17:58.080] I was once walking down the street and there was a guy on the sidewalk who couldn't get

[17:58.080 --> 17:59.080] up.

[17:59.080 --> 18:00.640] He clearly could not get up on his own, right?

[18:00.640 --> 18:04.600] And there are people walking by and other people sort of like checking him out, but

[18:04.600 --> 18:06.600] nobody was saying anything or offering to help.

[18:06.600 --> 18:08.560] So I just, hey, you need a hand?

[18:08.560 --> 18:09.560] And he did.

[18:09.560 --> 18:13.080] And then like three or four people right next to him were like, oh, let me help you, you

[18:13.080 --> 18:14.160] know what I mean?

[18:14.160 --> 18:18.320] But it was just, again, it's not that they were bad people, they just were paralyzed

[18:18.320 --> 18:21.200] by fear of social faux pas.

[18:21.200 --> 18:24.720] So it's kind of, it's the reverse of, I guess, of what you're saying, you know, where people

[18:24.720 --> 18:27.760] might not ask for help because they're afraid that it's socially not the right thing

[18:27.760 --> 18:28.760] to do.

[18:28.760 --> 18:29.760] But it is.

[18:29.760 --> 18:30.760] Give help, ask for help.

[18:30.760 --> 18:31.760] It's all good.

[18:31.760 --> 18:32.760] Everybody likes it.

[18:32.760 --> 18:34.720] Just don't let your social fears get in the way.

[18:34.720 --> 18:35.880] Don't let your social fears get in the way.

[18:35.880 --> 18:41.360] And also there are ways to buffer if you are scared that you're like putting somebody out,

[18:41.360 --> 18:44.160] is there are different like strategies that you can use.

[18:44.160 --> 18:45.840] You can give people outs.

[18:45.840 --> 18:50.200] You know, if you really do need help, but you also really are worried that you're going

[18:50.200 --> 18:52.880] to be putting somebody out by asking for help.

[18:52.880 --> 18:58.620] You can say things like, I'd really appreciate your help in this situation, but I also understand

[18:58.620 --> 19:02.120] that it may be too much for you and don't worry, I'll still get it taken care of.

[19:02.120 --> 19:08.360] Like there are ways to buffer and to negotiate the sociality of that so that you don't feel

[19:08.360 --> 19:10.200] like you're being coercive.

[19:10.200 --> 19:14.560] And so, yeah, it's like you see it all the time.

[19:14.560 --> 19:16.920] People who get stuff in life ask for it.

[19:16.920 --> 19:22.400] Yeah, or you could do what my Italian mother does and

[19:22.400 --> 19:24.280] say, don't worry about me.

[19:24.280 --> 19:25.280] I'll be fine.

[19:25.280 --> 19:28.080] I don't need anything.

[19:28.080 --> 19:29.080] The passive guilt.

[19:29.080 --> 19:30.080] They are.

[19:30.080 --> 19:31.080] They're wonderful at that.

[19:31.080 --> 19:34.800] Don't forget guys, don't forget.

[19:34.800 --> 19:41.480] If you help somebody, they owe you someday.

[19:41.480 --> 19:44.080] You might do a favor for me, you know?

[19:44.080 --> 19:45.080] That's right.

[19:45.080 --> 19:49.600] One of those, one of those things that my dad like drilled into my head that I like

[19:49.600 --> 19:53.000] always hear in my head all the time was he would always say, you don't ask, you don't

[19:53.000 --> 19:54.000] get.

[19:54.000 --> 19:57.280] So like I literally hear that in my head all the time when I'm in scenarios where I want

[19:57.280 --> 20:03.240] to ask for something and I totally do get that way sometimes where I'm like, yeah.

Bitcoin and Fedimints (20:03)

[20:03.240 --> 20:05.240] Let me ask you something, David.

[20:05.240 --> 20:12.800] I have a feeling that fedimints are not as tasty as they sound.

[20:12.800 --> 20:15.840] Tell us about Bitcoin and fedimints.

[20:15.840 --> 20:16.840] Fedimints.

[20:16.840 --> 20:21.520] So a fedimint, it's a portmanteau, I just realized I don't think I've ever said that

[20:21.520 --> 20:22.520] word out loud.

[20:22.520 --> 20:23.520] You said it right though.

[20:23.520 --> 20:24.520] Welcome to the show.

[20:24.520 --> 20:28.680] That's one of those words I've probably read like a million times.

[20:28.680 --> 20:31.820] I don't think I've ever said it in conversation or ever.

[20:31.820 --> 20:40.480] So a Fedimint, a portmanteau of a federated Chaumian mint, which basically it's a way

[20:40.480 --> 20:43.960] to scale Bitcoin.

[20:43.960 --> 20:54.780] There's two big problems with Bitcoin scaling to achieve its goal of being a worldwide payment

[20:54.780 --> 20:59.120] settlement system that's decentralized.

[20:59.120 --> 21:03.240] So one of those is that it doesn't provide very good privacy.

[21:03.240 --> 21:11.280] And the other one is that custody is kind of a sticky issue.

[21:11.280 --> 21:17.800] You've got people on two extremes when it comes to custody of Bitcoin.

[21:17.800 --> 21:24.760] You've got people who are kind of the old school Bitcoiners who will say, not your keys,

[21:24.760 --> 21:30.640] not your Bitcoin, which basically they're 100% self custody.

[21:30.640 --> 21:34.480] If you're not self custodying your Bitcoin, then you're not doing it right.

[21:34.480 --> 21:39.000] But there are problems with self custody, which is basically if you theoretically had

[21:39.000 --> 21:44.480] your life savings in Bitcoin and you lost the key to your Bitcoin, then you lose all

[21:44.480 --> 21:45.840] your life savings.

[21:45.840 --> 21:47.640] It's completely irretrievable.

[21:47.640 --> 21:49.920] There's no way to get back.

[21:49.920 --> 21:54.480] So the other option is third party custody, which the most common form is like people

[21:54.480 --> 21:59.860] will have it on Coinbase or Binance and they hold the keys for you.

[21:59.860 --> 22:02.360] All you need to have is your username and password.

[22:02.360 --> 22:07.400] And even if you lose that, you could prove your identity to them and they could restore

[22:07.400 --> 22:08.400] that for you.

[22:08.400 --> 22:10.540] They could restore your account for you.

[22:10.540 --> 22:15.840] The main problem with that is that you're hackable.

[22:15.840 --> 22:18.600] It's hackable.

[22:18.600 --> 22:25.320] It could be part of, you got a lot of rug pulls, especially in the past, you had a lot

[22:25.320 --> 22:30.680] of situations where somebody created some third party custody things simply to get people

[22:30.680 --> 22:37.480] to put their Bitcoin on there and then they just like walked away with it.

[22:37.480 --> 22:44.660] Fedimint is an idea that they call second party custody.

[22:44.660 --> 22:51.640] The basic idea of a Fedimint is where a small group of people could come together, they

[22:51.640 --> 23:00.320] could create this Fedimint and it would have some of the advantages of self-custody where

[23:00.320 --> 23:04.240] there would be no one point of failure where one person could get up and walk away with

[23:04.240 --> 23:11.000] everything and so that also gets rid of the risk of hacking as well because you would

[23:11.000 --> 23:14.920] need, well, it doesn't get rid of the risk, but it minimizes the risk of a hack because

[23:14.920 --> 23:21.200] you would need consensus of a group of people that say you had five people who were, they

[23:21.200 --> 23:26.280] call them the guardians of the Fedimint, you would need three out of those five people

[23:26.280 --> 23:28.680] to do anything for anything to happen to the Bitcoin.

[23:28.680 --> 23:33.840] So three out of those five people would have to all get hacked by the same person in order

[23:33.840 --> 23:38.120] for somebody to be able to walk away with the Bitcoin.
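
The three-of-five rule described above can be sketched as a simple quorum check. This is only an illustration of the threshold idea, not Fedimint's actual mechanism or API; the guardian names, the `THRESHOLD` constant, and the `is_authorized` function are all hypothetical.

```python
# Hypothetical 3-of-5 quorum check illustrating the guardian threshold idea.
# This is NOT Fedimint code; names and structure are assumptions for illustration.

GUARDIANS = {"g1", "g2", "g3", "g4", "g5"}  # the five guardians of the mint
THRESHOLD = 3                               # approvals needed before funds can move


def is_authorized(approvals):
    """Return True only if at least THRESHOLD distinct known guardians approved."""
    valid = set(approvals) & GUARDIANS      # ignore anyone who is not a guardian
    return len(valid) >= THRESHOLD


print(is_authorized({"g1", "g2", "g3"}))       # three guardians agree
print(is_authorized({"g1", "g2"}))             # only two: not enough
print(is_authorized({"g1", "g2", "mallory"}))  # an outsider does not count
```

An attacker who compromises one or two guardians still cannot reach the threshold, which is the point made in the discussion.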

[23:38.120 --> 23:43.600] It's resistant to the third party custody problems of being hacked or somebody just

[23:43.600 --> 23:52.280] pulling the rug under you and the other problem it can help alleviate is the privacy problem

[23:52.280 --> 24:01.240] which is not really achievable on the main blockchain of Bitcoin, on the base level.

[24:01.240 --> 24:05.440] If you're like a really technically savvy person you can do a lot of extra steps to

[24:05.440 --> 24:13.640] make your transactions somewhat private, but it's not anything that even a moderately technically

[24:13.640 --> 24:18.280] savvy person could do and there's a lot of steps that you could mess up where it would

[24:18.280 --> 24:19.280] fail.

[24:19.280 --> 24:25.720] So anyway, so Fedimint by design is much more private, it works using kind of an old technology

[24:25.720 --> 24:31.640] called eCash and using blind signatures, which I don't know if we want to get to that, that's

[24:31.640 --> 24:38.600] kind of old stuff, that blind signature makes it so that once you have your Bitcoin in the

[24:38.600 --> 24:45.920] Fedimint any transactions you do with that are essentially anonymous and it would only

[24:45.920 --> 24:50.680] be dealing with anything on the blockchain if you were to withdraw from the Fedimint.
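
The blind signatures mentioned above can be illustrated with a toy version of the classic RSA blind-signature scheme. This is a minimal sketch with tiny textbook numbers, so it is not secure, and it is not claimed to be the scheme Fedimint itself uses; the function names and parameters are chosen here for illustration. The idea is that the signer signs a disguised message and never sees the real one, yet the unblinded signature still verifies.

```python
# Toy RSA blind signature. Tiny textbook parameters, NOT secure.
# Illustrative only; requires Python 3.8+ for pow(x, -1, n).

p, q = 61, 53
n = p * q                  # public modulus
e = 17                     # public exponent
phi = (p - 1) * (q - 1)
d = pow(e, -1, phi)        # signer's private exponent


def blind(message, r):
    """User hides the message with a random factor r (coprime to n)."""
    return (message * pow(r, e, n)) % n


def sign(value):
    """Signer signs whatever it is given, without learning the message."""
    return pow(value, d, n)


def unblind(blind_signature, r):
    """User strips the blinding factor to get a signature on the message."""
    return (blind_signature * pow(r, -1, n)) % n


def verify(message, signature):
    """Anyone can check the signature with the public key (e, n)."""
    return pow(signature, e, n) == message % n


message = 1234             # stands in for a token, must be less than n
r = 7                      # blinding factor, fixed here for a repeatable demo

blinded = blind(message, r)
signature = unblind(sign(blinded), r)
print(verify(message, signature))
```

Because the signer only ever sees `blinded`, it cannot later link the signed token back to the person who requested it.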

[24:50.680 --> 24:53.800] Let me see if I have my head wrapped around this the correct way.

[24:53.800 --> 24:59.920] So it's a blockchain where three different people have to be hacked in order for a person

[24:59.920 --> 25:03.840] to actually get their hands on the Bitcoin or on the crypto.

[25:03.840 --> 25:09.960] And isn't the other hard part about that that would they know who the three people are inherently

[25:09.960 --> 25:13.280] by the blockchain or would that be part of the problem?

[25:13.280 --> 25:20.440] So the people who are the guardians, there could be, I think they said up to 15 and the

[25:20.440 --> 25:25.600] guardians could be anonymous or they could be public people.

[25:25.600 --> 25:31.720] The idea of the whole Fedimint is it's supposed to be, whereas the base layer of Bitcoin is

[25:31.720 --> 25:33.720] meant to be completely trustless.

[25:33.720 --> 25:41.320] There's no like third party involved in really any of the process of Bitcoin, it's all completely

[25:41.320 --> 25:45.920] automated and that's how it's designed to be.

[25:45.920 --> 25:48.120] And so this is supposed to bring a little bit of trust into it.

[25:48.120 --> 25:53.960] So realistically, most of the guardians probably you would want to be a publicly known person

[25:53.960 --> 26:01.880] so that people would trust you and your small group to custody their Bitcoin for them.

[26:01.880 --> 26:07.960] But that person who was the guardian wouldn't know any information about the people who

[26:07.960 --> 26:14.400] were participating in the Fedimint and they wouldn't have any information about what Bitcoin

[26:14.400 --> 26:17.160] is moving where or anything like that.

[26:17.160 --> 26:23.200] The other thing that is hitting me is that you also want those people would need to like

[26:23.200 --> 26:28.720] be there, you know, they would have to have a presence that isn't going to just go away

[26:28.720 --> 26:29.720] suddenly.

[26:29.720 --> 26:30.720] Right.

[26:30.720 --> 26:35.440] You know, if or would those three people be picked at random when a trade is happening?

[26:35.440 --> 26:37.340] Is it like permanent or random?

[26:37.340 --> 26:38.340] It's permanent.

[26:38.340 --> 26:43.720] So somebody would basically create or a small group of people would basically create a Fedimint.

[26:43.720 --> 26:51.180] The idea is that it would be implemented on different scales, but their ideal scale that

[26:51.180 --> 26:55.640] they're trying to what they're trying to do is create this for community level.

[26:55.640 --> 26:58.080] It's almost comparable to like a community bank.

[26:58.080 --> 26:59.080] Oh, OK.

[26:59.080 --> 27:00.080] That makes sense.

[27:00.080 --> 27:01.080] Yeah.

[27:01.080 --> 27:08.240] So and the idea of having Bitcoin banks goes all the way back to 2010, basically this idea

[27:08.240 --> 27:15.340] that you would have a bank that was very similar to to a regular bank, but it would be based

[27:15.340 --> 27:16.340] on Bitcoin.

[27:16.340 --> 27:20.880] This is one of the first attempts to actually implement that, though.

[27:20.880 --> 27:25.800] As like a protocol, as opposed to being like, you know, most other solutions are just like

[27:25.800 --> 27:32.100] a company, an app, whereas this is more of a protocol layer solution where anybody could

[27:32.100 --> 27:33.100] use it.

[27:33.100 --> 27:37.120] It's not like, you know, it's not like proprietary app or something.

[27:37.120 --> 27:38.120] That sounds interesting.

[27:38.120 --> 27:44.360] I mean, it does sound like it solves the problem of basically having your Bitcoin stolen by

[27:44.360 --> 27:48.160] someone who creates a totally like temporary exchange.

[27:48.160 --> 27:53.400] I mean, it does it does seem to have more, you know, more of anonymous security built

[27:53.400 --> 27:56.880] into it in a sense, you know, like it does seem like it could do it.

[27:56.880 --> 28:01.320] But I mean, is it is it something that is is happening right now or is this is this

[28:01.320 --> 28:02.360] in the works?

[28:02.360 --> 28:07.600] They're hoping to have like to be able to like launch probably like a prototype or,

[28:07.600 --> 28:11.700] you know, maybe like a beta version or something around then.

[28:11.700 --> 28:15.560] So it's not available right now, but should be available soon, hopefully.

[28:15.560 --> 28:17.100] Well, thanks, David.

Multivitamins for Memory (28:17)

- [link_URL Effects of cocoa extract and a multivitamin on cognitive function: A randomized clinical trial][5]

[28:17.100 --> 28:23.640] On the live stream this last Friday, somebody asked us about the recent study on multivitamins

[28:23.640 --> 28:27.880] and memory, which I hadn't done a deep dive on yet, and I said I would do it for the show

[28:27.880 --> 28:28.880] this week.

[28:28.880 --> 28:29.880] So here I am.

[28:29.880 --> 28:35.120] So the study is effects of cocoa extract and a multivitamin on cognitive function, a randomized

[28:35.120 --> 28:36.780] clinical trial.

[28:36.780 --> 28:44.520] This essentially was studying both a multivitamin and cocoa extract individually and together

[28:44.520 --> 28:45.680] versus placebo.

[28:45.680 --> 28:51.600] So there were four, you know, four groups in this trial was randomized and placebo controlled

[28:51.600 --> 28:52.780] and blinded.

[28:52.780 --> 28:58.080] And then they followed older subjects over three years.

[28:58.080 --> 29:02.540] It was a telephone evaluation, but you could do that because it was essentially just a

[29:02.540 --> 29:04.560] verbal mental status exam.

[29:04.560 --> 29:09.640] You know, they asked them to do verbal tasks, naming tasks, trail making, whatever.

[29:09.640 --> 29:13.960] Then they were these were standardized cognitive evaluations that you could be that can be

[29:13.960 --> 29:14.960] scored.

[29:14.960 --> 29:17.920] And then you can attach a number to it.

[29:17.920 --> 29:23.960] And the bottom line is that what they found is over those three years that taking a multivitamin

[29:23.960 --> 29:31.280] every day was associated with a greater improvement in performance on these cognitive tests than

[29:31.280 --> 29:34.780] was placebo or the cocoa extract.

[29:34.780 --> 29:39.800] The cocoa extract had zero effect, so there was no apparent benefit to that.

[29:39.800 --> 29:40.800] Now I say a greater.

[29:40.800 --> 29:44.520] Yeah, I say a greater increase because everyone improved, right?

[29:44.520 --> 29:47.040] I mean, every group improved.

[29:47.040 --> 29:49.600] And that's a well-known phenomenon.

[29:49.600 --> 29:53.480] Whenever you do a study like this, it's a practice effect, right?

[29:53.480 --> 29:56.640] The second time you do the set of standardized tests, you're going to be done.

[29:56.640 --> 29:57.640] You're going to do better.

[29:57.640 --> 29:59.600] The third time, you're going to do better.

[29:59.600 --> 30:01.040] And then it kind of plateaus.

[30:01.040 --> 30:04.600] So you can do this one of two ways.

[30:04.600 --> 30:11.840] You can give the test to your subjects until they plateau and then that's their baseline,

[30:11.840 --> 30:13.000] right?

[30:13.000 --> 30:18.520] Or you just have to compare it against the placebo and then you see who improves more,

[30:18.520 --> 30:19.520] right?

[30:19.520 --> 30:21.000] Is there a difference or not?

[30:21.000 --> 30:22.000] So that's what they chose to do.

[30:22.000 --> 30:29.920] They did not establish, they didn't do a practice series to get them to their plateau first.
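
The second approach described above, comparing against placebo instead of first training subjects to a plateau, can be sketched as follows. The scores are made-up numbers for illustration only and are not data from the study; the function and variable names are likewise hypothetical.

```python
# Sketch: separating a practice effect from a treatment effect.
# All scores are hypothetical, invented for illustration (not study data).
from statistics import mean


def mean_change(baseline, followup):
    """Average per-subject improvement from baseline to follow-up."""
    return mean(f - b for b, f in zip(baseline, followup))


placebo_baseline = [50, 52, 48, 51]
placebo_followup = [53, 55, 51, 54]   # everyone improves from practice alone

vitamin_baseline = [49, 51, 50, 52]
vitamin_followup = [53, 55, 54, 56]   # improves a little more

practice_effect = mean_change(placebo_baseline, placebo_followup)
vitamin_change = mean_change(vitamin_baseline, vitamin_followup)

# The treatment effect is what is left after subtracting the practice effect.
treatment_effect = vitamin_change - practice_effect

print(practice_effect, vitamin_change, treatment_effect)
```

In this made-up example both groups improve, and only the difference between them is attributed to the intervention.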

[30:29.920 --> 30:33.480] So a few things to put this into perspective.

[30:33.480 --> 30:36.720] First of all, this is not the first study to look at the effects, the correlation between

[30:36.720 --> 30:42.560] taking a multivitamin and cognitive function in older patients or in patients in general

[30:42.560 --> 30:43.560] subjects.

[30:43.560 --> 30:48.320] There's been, you know, decades of research into this with pretty mixed results.

[30:48.320 --> 30:52.200] Like there's no consistent effect here, no huge effect here.

[30:52.200 --> 30:55.920] And the general interpretation of all the research has been, yeah, there's just no,

[30:55.920 --> 31:00.120] nothing that you can point to that's clearly demonstrated.

[31:00.120 --> 31:05.760] The reason the results are mixed is probably because there isn't a huge effect here.

[31:05.760 --> 31:10.440] But you know, the researchers took all the previous research into consideration.

[31:10.440 --> 31:14.920] They wanted to do a larger study with, that's more, that's with a longer follow-up.

[31:14.920 --> 31:18.920] Most studies were only like six months or a year, so they did three years and et cetera.

[31:18.920 --> 31:23.360] Basically just do a bigger, better, longer study to see if they could squeeze out a statistically

[31:23.360 --> 31:25.560] significant effect that way.

[31:25.560 --> 31:26.560] And they did.

[31:26.560 --> 31:27.560] So what does that mean?

[31:27.560 --> 31:31.760] So we need to, again, statistical significance, as I've said many, many times on this show,

[31:31.760 --> 31:37.440] is not the only thing to look at when evaluating the clinical significance of a study of a

[31:37.440 --> 31:38.980] medical trial.

[31:38.980 --> 31:44.180] So you have to also look at the clinical significance of the difference, right?

[31:44.180 --> 31:47.560] So what, how much of an effect size is there here?

[31:47.560 --> 31:52.940] And the bottom line is that the overall effect size was fairly small, right?

[31:52.940 --> 31:59.120] It was less than the amount that everybody improved just from the study effect, right?

[31:59.120 --> 32:01.200] Just from the practice effect.

[32:01.200 --> 32:04.700] So it was pretty small, but it was statistically significant.

[32:04.700 --> 32:07.520] And then the other question is, well, what could be going on here?

[32:07.520 --> 32:12.200] So first of all, this could be a spurious effect and as the researchers acknowledge,

[32:12.200 --> 32:18.480] we need to do this study with more individuals and with a more diverse population and we

[32:18.480 --> 32:21.600] need to gather more data to see like, is this real?

[32:21.600 --> 32:25.500] And if so, what are the, what's the probable mechanism?

[32:25.500 --> 32:33.520] For me, the big glaring omission in this test was that they did not test vitamin levels

[32:33.520 --> 32:41.660] before doing the study, because let's say older patients, older people do tend to have

[32:41.660 --> 32:45.380] lower vitamin B12 levels, for example, cause that's a hard vitamin to absorb.

[32:45.380 --> 32:48.040] You need a special molecule to bind to it.

[32:48.040 --> 32:53.400] It's called intrinsic factor and then sort of usher it over, you know, the gastric membrane.

[32:53.400 --> 32:58.000] So it doesn't just get passively absorbed and you know, that, that can decrease as we

[32:58.000 --> 33:02.480] age and people could become B12 deficient as we get older.

[33:02.480 --> 33:05.600] Pretty much I check it in every single patient that I have, cause it's just that one of,

[33:05.600 --> 33:10.120] it's a basic neurology lab that we do because it affects neurological functioning.

[33:10.120 --> 33:16.960] And you know, it's, it's a pretty high incidence of B12, either insufficiency or deficiency

[33:16.960 --> 33:23.620] in the population being studied here and you know, multivitamins typically contain B12.

[33:23.620 --> 33:32.200] So how do they know they're not just treating undiagnosed B12 deficiency in this population,

[33:32.200 --> 33:35.920] which has a measure, which is, has a known benefit to cognition, right?

[33:35.920 --> 33:40.760] Has a known benefit in terms of dementia, you know, B12 deficiency contributes to dementia

[33:40.760 --> 33:44.320] and can cause it by itself if it's bad enough for long enough.

[33:44.320 --> 33:47.920] But so anyway, that seemed like a pretty big omission to me.

[33:47.920 --> 33:51.760] And I think that definitely a follow-up study would do that because that might, you know,

[33:51.760 --> 33:57.240] treating otherwise undiagnosed deficiency could be the entire explanation here, could

[33:57.240 --> 33:58.240] be the entire effect.

[33:58.240 --> 34:02.760] For the vitamin industry, isn't that also kind of their argument?

[34:02.760 --> 34:07.280] There's an important difference though, between targeted supplementation and routine

[34:07.280 --> 34:10.040] multivitamin supplementation.

[34:10.040 --> 34:16.180] And there is known harm, at least, you know, correlations between routine multivitamin

[34:16.180 --> 34:20.600] use and, and other, you know, health, negative health outcomes like heart disease.

[34:20.600 --> 34:21.600] Right.

[34:21.600 --> 34:22.880] Cause you're taking too much of something.

[34:22.880 --> 34:23.880] Yeah.

[34:23.880 --> 34:27.800] And I'll tell you just from again, having tested vitamin levels on hundreds and hundreds

[34:27.800 --> 34:33.360] of patients of all ages, but certainly many of them older, um, you know, we diagnose levels

[34:33.360 --> 34:38.100] that are, that are too high as frequently as we diagnose levels that are too low.

[34:38.100 --> 34:42.200] And so often I'm telling my patients, stop this, stop that, and add this, you know what

[34:42.200 --> 34:43.200] I mean?

[34:43.200 --> 34:44.840] Like I'm, I have to direct their supplementation.

[34:44.840 --> 34:48.560] They're taking too much of certain things and not enough of other things.

[34:48.560 --> 34:49.560] That's very, very common.

[34:49.560 --> 34:56.840] So just, you know, a blanket multivitamin without any pre-testing of vitamin levels,

[34:56.840 --> 34:58.160] I don't think is the right approach.

[34:58.160 --> 35:02.280] I don't think the evidence supports that, but the, you know, the, the, the, all the

[35:02.280 --> 35:05.780] other evidence, if you look at it in its totality, it does support, there's a lot of instances

[35:05.780 --> 35:11.560] where targeted supplementation is proven to be effective and is, is good, you know, medical

[35:11.560 --> 35:16.520] management, but routine multivitamin supplementation really isn't.

[35:16.520 --> 35:21.000] And this study does not really answer that question because they didn't test vitamin

[35:21.000 --> 35:22.000] levels.

[35:22.000 --> 35:26.720] So, um, they didn't make the key distinction in my part, in my, in my opinion.

[35:26.720 --> 35:31.480] And of course a good scientific study should, but I guess for the devil's advocate thing

[35:31.480 --> 35:36.640] that I'm asking, just trying to kind of channel the people who take multivitamins regularly

[35:36.640 --> 35:43.280] is, is there a, is there a benefit to a certain percent of the population who you know are

[35:43.280 --> 35:48.120] not going to be going in for this routine testing, who you know are very likely not

[35:48.120 --> 35:54.880] on top of their levels, a just in case multivitamin, it just, does the good outweigh the harm?

[35:54.880 --> 35:58.520] So again, if you look at, you can't answer that question from looking at this study,

[35:58.520 --> 36:02.280] but because it's only looking at a certain number of things, but if you look at the totality

[36:02.280 --> 36:06.800] of the research into multivitamins, there seems to be a net negative, if anything, you

[36:06.800 --> 36:10.680] know, correlation with, with just taking a routine multivitamin.

[36:10.680 --> 36:13.580] Here's here's one reason why that could be a bad thing.

[36:13.580 --> 36:18.400] If you're in your sixties, you know, let's say, like, or seventies, the target population

[36:18.400 --> 36:22.220] in this study, you should be seeing your primary care doctor at least once a year.

[36:22.220 --> 36:26.000] So if you take a multivitamin and go, I don't have to see my primary care doctor, I'm taking

[36:26.000 --> 36:29.720] a multivitamin, that's, I'm covered, you know, that actually can have an unintended

[36:29.720 --> 36:31.520] negative consequence.

[36:31.520 --> 36:35.160] But it's hard to know how that all shakes out, you know, because there's, there's multiple

[36:35.160 --> 36:38.880] possible unintended consequences here.

[36:38.880 --> 36:42.600] But the thing is you should be seeing your primary care doctor at least annually.

[36:42.600 --> 36:47.540] And if you do, I guarantee you that they're checking your B12 level and other, and other

[36:47.540 --> 36:48.680] vitamin levels as well.

[36:48.680 --> 36:51.600] And if you certainly, if you have any neurological symptoms, you're going to get pretty much

[36:51.600 --> 36:56.080] a full metabolic screen, you know, nutritional screen, you should anyway.

[36:56.080 --> 37:01.560] And then you'll be able to take personalized, you know, targeted supplementation.

[37:01.560 --> 37:08.000] And so, you know, there, there may be negative unintended consequences here that are not

[37:08.000 --> 37:11.680] being picked up by this study, which is mainly concerned with cognitive function.

[37:11.680 --> 37:12.680] Yeah.

[37:12.680 --> 37:15.720] And by the way, there's another consideration here and I'll use a personal anecdote.

[37:15.720 --> 37:21.400] I, you know, did have B12 testing when I was struggling, have been struggling with some,

[37:21.400 --> 37:28.960] some specific symptoms and my physician recommended, and same thing actually with iron.

[37:28.960 --> 37:34.440] So I was iron deficient and B12 deficient and sure, I could have like done targeted

[37:34.440 --> 37:38.480] supplementation by going down the vitamin aisle, but these are like not FDA approved

[37:38.480 --> 37:40.120] and I don't really know what's in them.

[37:40.120 --> 37:43.260] And you know, this is, they're not regulated very well.

[37:43.260 --> 37:46.820] And ultimately I had some issues with absorption, like with like gut problems from trying to

[37:46.820 --> 37:48.240] take a oral iron.

[37:48.240 --> 37:54.640] So whatever I was able to do B12 injections and I ended up having to get iron infusion.

[37:54.640 --> 37:59.400] So now I'm getting prescription medication that I know is FDA approved.

[37:59.400 --> 38:02.880] I know it's made in a lab, it's clean, it's been tested.

[38:02.880 --> 38:05.640] And the same is true of many oral vitamins as well.

[38:05.640 --> 38:12.920] And, and I write prescriptions for vitamins to my patients so they know exactly what they're

[38:12.920 --> 38:15.120] getting and what dose and everything.

[38:15.120 --> 38:16.280] And that's the other thing.

[38:16.280 --> 38:21.840] If I'm prescribing B12 supplements to a patient who has B12 deficiency, who's whatever in

[38:21.840 --> 38:26.400] their sixties or seventies, I then have to check followup levels because they may not

[38:26.400 --> 38:27.760] be absorbing the B12.

[38:27.760 --> 38:28.760] It may not work.

[38:28.760 --> 38:29.760] You know, oral B12 may not.

[38:29.760 --> 38:30.760] That's what happened to me.

[38:30.760 --> 38:31.760] Yeah.

[38:31.760 --> 38:32.760] And then you have, then you have to get the injections.

[38:32.760 --> 38:35.200] You have to bypass the gut and do the injections.

[38:35.200 --> 38:42.160] So again, just taking a multivitamin may not be addressing the actual, the actual problem.

[38:42.160 --> 38:46.720] And if people are doing that, instead of getting their levels checked, that could have a net

[38:46.720 --> 38:47.720] negative level.

[38:47.720 --> 38:51.520] So you have to compare it to, you know, like best practices also.

[38:51.520 --> 38:52.520] Yeah.

[38:52.520 --> 38:56.920] It still could be true that, you know, there's an intention to treat analysis here, although

[38:56.920 --> 39:00.360] I will say that there was about a 10% dropout, which they didn't count.

[39:00.360 --> 39:03.680] And you know, that was probably enough people to affect the outcome of the study because

[39:03.680 --> 39:06.520] the effect sizes were not that huge.

[39:06.520 --> 39:10.040] And you have to wonder why that, you know, there was that dropout and what did, what,

[39:10.040 --> 39:11.440] what was going on with those individuals.

[39:11.440 --> 39:12.440] Right.

[39:12.440 --> 39:13.440] Did that bias the outcome?

[39:13.440 --> 39:14.440] Yeah.

[39:14.440 --> 39:15.440] Right.

[39:15.440 --> 39:16.440] Exactly.

[39:16.440 --> 39:17.520] You know, that's, that's why you have to disclose the dropout rate.

[39:17.520 --> 39:19.800] But in any case, yeah, I mean, the thing is, yeah, sure.

[39:19.800 --> 39:24.040] Some people in the study were probably helped by taking, by taking a multivitamin.

[39:24.040 --> 39:25.040] That's true.

[39:25.040 --> 39:30.280] But you know, we definitely, my concern is that because there's already a huge cultural

[39:30.280 --> 39:35.760] impetus to just take a multivitamin just in case, that if people get the bottom line message

[39:35.760 --> 39:39.560] from this, that taking the multivitamin is good and that's all they have to worry about,

[39:39.560 --> 39:41.800] that could have a net negative effect.

[39:41.800 --> 39:45.640] And that we really do want to get the message out that, you know, vitamins are a medical

[39:45.640 --> 39:46.640] intervention.

[39:46.640 --> 39:50.200] You know, you do need to have your levels checked, especially when you get older, especially

[39:50.200 --> 39:55.800] if you have certain medical concerns or symptoms and things need to be done in an evidence

[39:55.800 --> 39:58.120] based way, not just shooting from the hip.

[39:58.120 --> 39:59.120] Take a multivitamin.

[39:59.120 --> 40:00.120] Don't worry about it.

[40:00.120 --> 40:01.120] Right.

[40:01.120 --> 40:04.080] Like you wouldn't take an, well, maybe aspirin is different, but I wouldn't take like a

[40:04.080 --> 40:08.280] full dose of ibuprofen every day just in case I'm going to get a headache.

[40:08.280 --> 40:12.040] That's dangerous.

[40:12.040 --> 40:16.480] The dose ranges are pretty broad for vitamins, but you know, but we do see vitamin toxicity

[40:16.480 --> 40:17.480] does happen.

[40:17.480 --> 40:18.480] Yeah.

[40:18.480 --> 40:20.320] Because you don't know what else, you don't know what else they're, they're having their

[40:20.320 --> 40:23.000] regular diet, what else they're consuming.

[40:23.000 --> 40:26.080] And sometimes when people are really doing it, like for these health, they're taking

[40:26.080 --> 40:30.640] like thousands, plural, percent of like the recommended daily intake.

[40:30.640 --> 40:32.840] Well, the other thing is, yeah, they might be taking too much.

[40:32.840 --> 40:33.840] They don't know what dose to take.

[40:33.840 --> 40:35.240] The other thing, or they might be taking too little.

[40:35.240 --> 40:36.760] That's the other thing.

[40:36.760 --> 40:42.480] There are, like I've had patients who've had like, you know, vitamin B6 levels that are

[40:42.480 --> 40:47.800] 10 times the upper limit of normal, like really super high levels.

[40:47.800 --> 40:51.720] And they tell me that they don't know that they're not supplementing.

[40:51.720 --> 40:57.120] The thing is there's so much embedded supplementation in, you know, food.

[40:57.120 --> 40:58.600] Oh, like fortified foods.

[40:58.600 --> 41:03.480] Fortified, like are you drinking vitamin water or whatever, the cereal, whatever, that they're,

[41:03.480 --> 41:08.360] they might be getting too much of certain vitamins without specifically taking a multivitamin

[41:08.360 --> 41:10.560] or specifically supplementing.

[41:10.560 --> 41:14.040] So again, it's why it's good to sort of have a conversation with them about like what they're

[41:14.040 --> 41:17.840] eating and what they're not eating and then, and what their levels are and, and again,

[41:17.840 --> 41:23.080] try to give them some specific personalized advice about their diet as well as, you know,

[41:23.080 --> 41:26.000] what may or may not need to be supplemented.

[41:26.000 --> 41:28.320] That's the direction I definitely would like to see things go.

[41:28.320 --> 41:31.640] And that's, you know, I think neurology is there for the things that we are concerned

[41:31.640 --> 41:36.440] about because, you know, actual deficiencies in the, in these vitamins can cause neurological

[41:36.440 --> 41:40.680] symptoms and dementia being a big one, you know, so, you know, we're kind of already

[41:40.680 --> 41:41.840] all over that.

[41:41.840 --> 41:49.400] But anyway, it's and again, this is one study embedded in a very, you know, decades of research

[41:49.400 --> 41:51.960] showing results kind of all over the place.

[41:51.960 --> 41:56.840] So we can't, you know, look at this as if this is conclusive, you know, we definitely,

[41:56.840 --> 42:01.480] I would definitely like to see, you know, better controlled, more thorough, larger studies

[42:01.480 --> 42:05.280] with more diverse population and, you know, see, and see if there's a consistent effect

[42:05.280 --> 42:06.280] here.

Refreezing the Poles (42:07)

[42:06.280 --> 42:07.280] All right, Bob.

[42:07.280 --> 42:08.280] Yes.

[42:08.280 --> 42:10.300] I mean, is this serious?

[42:10.300 --> 42:15.000] They're talking about refreezing the poles if they melt.

[42:15.000 --> 42:16.000] Yeah.

[42:16.000 --> 42:17.080] Check this, check this out.

[42:17.080 --> 42:20.360] So this is geoengineering in the news.

[42:20.360 --> 42:24.840] This time it's a new study that's determined that deploying aerosols once a year, just

[42:24.840 --> 42:29.960] at the poles is not only feasible with current technology levels, but could also potentially

[42:29.960 --> 42:34.440] reverse the alarming ice melt that we are seeing there.

[42:34.440 --> 42:39.120] This was published recently in Environmental Research Communications, lead author, Wake

[42:39.120 --> 42:44.240] Smith, Steve's buddy at Yale, he's a lecturer at Yale, and senior fellow at the Harvard

[42:44.240 --> 42:45.560] Kennedy School.

[42:45.560 --> 42:50.160] So all right, so we're all aware of how climate change is basically like in our face now,

[42:50.160 --> 42:51.160] right?

[42:51.160 --> 42:55.440] It's like, no one's really saying anymore, not much anyway, that is that because of climate

[42:55.440 --> 42:56.440] change?

[42:56.440 --> 43:00.960] The answer is pretty much yes, for a lot of this stuff that we're seeing.

[43:00.960 --> 43:03.480] And it's only going to get worse.

[43:03.480 --> 43:09.520] And so it's worth stressing that the poles of the Earth have been especially and worryingly

[43:09.520 --> 43:13.480] impacted above and beyond the global average.

[43:13.480 --> 43:16.600] So I mean, look at the heat waves this past year at both poles.

[43:16.600 --> 43:18.720] They're breaking all previous records.

[43:18.720 --> 43:24.560] But the Arctic has been hit even harder, the hardest of the harder of the two, warming

[43:24.560 --> 43:27.200] at twice the global average.

[43:27.200 --> 43:29.840] And this is due to what's called the Arctic amplification.

[43:29.840 --> 43:32.560] And this has multiple causes.

[43:32.560 --> 43:37.920] One is the reduction in snow and sea ice albedo (that's albedo, albedo, albedo).

[43:37.920 --> 43:44.480] So it's a reduction in snow and sea ice coefficient of reflectivity.

[43:44.480 --> 43:48.760] Increased heating due to increasing Arctic cloud cover and water vapor content, that's

[43:48.760 --> 43:50.800] also adding to the amplification.

[43:50.800 --> 43:55.680] And there's also more energy flowing from the lower latitudes to the Arctic for various

[43:55.680 --> 43:56.680] reasons.

[43:56.680 --> 44:00.440] And finally, there's more soot and black carbon aerosols in the atmosphere.

[44:00.440 --> 44:03.100] And that's causing even more heat to be absorbed.

[44:03.100 --> 44:08.360] So all of this is kind of like getting together into like a trifecta of amplification in the

[44:08.360 --> 44:11.320] Arctic that's making it as bad as we're seeing it there.

[44:11.320 --> 44:12.320] So here's an example.

[44:12.320 --> 44:18.200] Did you guys know that the Arctic annual mean surface temperature from '71 to 2019 has already

[44:18.200 --> 44:20.920] increased over three degrees Celsius?

[44:20.920 --> 44:25.400] Already from 1971 to 2019, wow.

[44:25.400 --> 44:26.400] So now what?

[44:26.400 --> 44:30.000] Now the Antarctic is not as dramatically bad.

[44:30.000 --> 44:36.620] But I mean, there's also the melting Antarctic ice sheet, which I think is very scary.

[44:36.620 --> 44:38.840] That could very well be a climate change tipping point.

[44:38.840 --> 44:40.360] A lot of people are talking about it.

[44:40.360 --> 44:44.200] A lot of the news coming from there is a little scary.

[44:44.200 --> 44:47.680] So with all that as the foundation, put that in your head.

[44:47.680 --> 44:52.640] So let's look at the type of geoengineering that was evaluated in this recent study that

[44:52.640 --> 44:53.640] I'm going to talk about.

[44:53.640 --> 45:00.200] So it's called SAI for Stratospheric Aerosol Injection, which is designed to increase Earth's

[45:00.200 --> 45:07.240] albedo, or how much light it reflects, which would reduce the amount of global warming,

[45:07.240 --> 45:08.240] right?

[45:08.240 --> 45:09.920] Very, very simple at that level.

[45:09.920 --> 45:14.400] Now the researchers stress, appropriately, that this type of geoengineering is a Band-Aid.

[45:14.400 --> 45:19.080] It's part of an overall strategy that should include other climate strategies like mitigation,

[45:19.080 --> 45:21.480] adaptation, and carbon dioxide removal.

[45:21.480 --> 45:23.320] Those are the big boys.

[45:23.320 --> 45:24.320] That's what you want.

[45:24.320 --> 45:25.320] So this is just a Band-Aid.

[45:25.320 --> 45:30.280] This is something that could help delay things basically until we get our shit together.

[45:30.280 --> 45:35.840] Now if you've been following stratospheric aerosol injection, as I know we all have,

[45:35.840 --> 45:37.680] you know how controversial it is, right?

[45:37.680 --> 45:39.680] It's very controversial.

[45:39.680 --> 45:40.680] Think about it.

[45:40.680 --> 45:44.960] Widespread deployment of chemicals into the upper atmosphere all over the globe.

[45:44.960 --> 45:49.840] Oh gosh, the chemtrail nutters are coming out of the woodwork for this one.

[45:49.840 --> 45:52.640] But it would seriously help cool down the Earth.

[45:52.640 --> 45:54.360] I mean, there's pretty much no doubt about that.

[45:54.360 --> 45:55.360] I mean, it's simple.

[45:55.360 --> 45:59.000] Wait, wait, Bob, you're jumping right to this is going to work?

[45:59.000 --> 46:00.000] Like, no, no, no.

[46:00.000 --> 46:01.000] I'm saying-

[46:01.000 --> 46:02.000] No, but like what are the unintended consequences?

[46:02.000 --> 46:03.000] Oh my God.

[46:03.000 --> 46:04.000] Yes.

[46:04.000 --> 46:07.680] I'm talking that in general, stratospheric aerosol injection will cool down the Earth.

[46:07.680 --> 46:09.120] That's not in question.

[46:09.120 --> 46:13.120] Isn't that the premise of Snowpiercer, that movie and TV show?

[46:13.120 --> 46:17.320] They inject the aerosols and they cool the Earth like minus 160 degrees.

[46:17.320 --> 46:18.320] Oh boy.

[46:18.320 --> 46:19.320] That was deliberate?

[46:19.320 --> 46:20.320] Oh my gosh.

[46:20.320 --> 46:21.320] Yeah.

[46:21.320 --> 46:27.040] Is that aerosol just like vaporized water or is it some other-

[46:27.040 --> 46:31.760] It's various chemicals that could be used, like sulfur dioxide.

[46:31.760 --> 46:32.760] So-

[46:32.760 --> 46:33.760] Yeah, it's not just water.

[46:33.760 --> 46:34.760] Yeah.

[46:34.760 --> 46:40.200] So, I was saying there's very little controversy that it would help cool the Earth, but the

[46:40.200 --> 46:43.080] unwanted side effects and the expense are the huge things.

[46:43.080 --> 46:48.240] I mean, we can get side effects that could be worse than the climate change itself.

[46:48.240 --> 46:50.040] And I mean, it could be horrific.

[46:50.040 --> 46:54.240] So, I mean, this is really not what this study was about.

[46:54.240 --> 46:58.960] I mean, we're not even close to understanding the impact to the people, to the environment.

[46:58.960 --> 47:00.480] So yeah.

[47:00.480 --> 47:05.520] And then don't forget, there's also the money it would cost for such a global geoengineering

[47:05.520 --> 47:07.320] is off the hook.

[47:07.320 --> 47:10.800] And even the technology to do it, we're not even there.

[47:10.800 --> 47:14.440] So as you can probably tell, there's a big but right here.

[47:14.440 --> 47:18.480] Now Jay, say it with me on four: one, two, three, but-

[47:18.480 --> 47:19.480] Sexual innuendo.

[47:19.480 --> 47:20.480] What?

[47:20.480 --> 47:21.480] All right.

[47:21.480 --> 47:22.480] It's close.

[47:22.480 --> 47:23.480] It's close.

[47:23.480 --> 47:31.520] But most of the research and studies and simulations and speculation of stratospheric aerosol injection

[47:31.520 --> 47:37.200] has all been about global solar geoengineering, deploying aerosols globally in order to lower

[47:37.200 --> 47:38.540] temperatures worldwide.

[47:38.540 --> 47:42.160] That's what, if people are talking about it and you're reading about it, chances are that's

[47:42.160 --> 47:45.840] what they're talking about, global insertion.

[47:45.840 --> 47:50.120] And that's not what this paper is about.

[47:50.120 --> 47:54.760] There's only actually been a few studies dealing with a more limited version of this called

[47:54.760 --> 47:56.480] subpolar geoengineering.

[47:56.480 --> 47:59.000] And this is what the study is about.

[47:59.000 --> 48:06.080] Now this flavor of SAI has geographically limited deployments, in this case, 60 degrees

[48:06.080 --> 48:09.680] north and south, subpolar region of the poles.

[48:09.680 --> 48:14.520] Now, right off the bat, if you know about this, this strategy has some interesting potential

[48:14.520 --> 48:15.520] benefits.

[48:15.520 --> 48:19.080] First off, it's technically easier to pull off because at the poles, the troposphere

[48:19.080 --> 48:25.040] is lower, which means that you could insert the aerosols at a lower altitude than anywhere

[48:25.040 --> 48:26.680] else on the planet.

[48:26.680 --> 48:27.680] So that's huge.

[48:27.680 --> 48:29.040] That is absolutely huge.

[48:29.040 --> 48:31.080] You don't have to go nearly as high.

[48:31.080 --> 48:37.280] Getting a plane filled with these aerosols at such incredible altitude is really hard.

[48:37.280 --> 48:41.680] And so this is a big bonus for these subpolar insertions.

[48:41.680 --> 48:46.240] And the other benefit is that there have been some of these polar studies and they have

[48:46.240 --> 48:52.000] showed that an Arctic deployment would be better at preserving sea ice than the global

[48:52.000 --> 48:53.380] equatorial injection.

[48:53.380 --> 48:55.240] So that's big as well.

[48:55.240 --> 48:56.240] Okay.

[48:56.240 --> 49:01.400] So the lead author, Wake Smith, says, "There's widespread and sensible trepidation

[49:01.400 --> 49:04.240] about deploying aerosols to cool the planet.

[49:04.240 --> 49:09.440] But if the risk-benefit equation were to pay off anywhere, it would be at the poles."

[49:09.440 --> 49:12.800] So it was like, if this technique is going to work at all, this is where it's going to

[49:12.800 --> 49:13.800] work.

[49:13.800 --> 49:15.140] Now I recommend reading the study.

[49:15.140 --> 49:17.920] It's actually very, very accessible.

[49:17.920 --> 49:22.640] It's called "A subpolar-focused stratospheric aerosol injection deployment scenario."

[49:22.640 --> 49:28.760] So now it takes a deep dive, as you might imagine, into this whole idea of subpolar

[49:28.760 --> 49:33.800] geoengineering and what it would actually be like, what would it involve.

[49:33.800 --> 49:35.180] So I'll cut to the chase.

[49:35.180 --> 49:40.320] The paper's conclusion says, "Substantially cooling the world's polar and subpolar regions

[49:40.320 --> 49:42.400] would be logistically feasible."

[49:42.400 --> 49:47.360] So in this study, they've determined that it is absolutely logistically feasible to pull

[49:47.360 --> 49:48.360] this off.

[49:48.360 --> 49:55.060] I'll continue quoting: "This could arrest and likely reverse the melting of sea ice, land

[49:55.060 --> 50:00.040] ice and permafrost in the most vulnerable regions of the Earth's cryosphere."

[50:00.040 --> 50:02.380] The cryosphere is an awesome word.

[50:02.380 --> 50:06.800] It's just the parts of the earth that have frozen, that have ice, frozen water.

[50:06.800 --> 50:08.060] And then it ends here.

[50:08.060 --> 50:12.400] "This in turn would substantially slow sea level rise globally."

[50:12.400 --> 50:16.120] So that to me, that's fantastic.

[50:16.120 --> 50:17.120] That's a wonderful result.

[50:17.120 --> 50:20.720] I couldn't really hope for too much more in the conclusion.

[50:20.720 --> 50:25.640] Their plan could reduce the average surface temperatures north of 60 degrees by a year

[50:25.640 --> 50:29.180] round average of two degrees Celsius.

[50:29.180 --> 50:35.060] That's basically could get it back to close to pre-industrial average temperatures.

[50:35.060 --> 50:36.100] Amazing reduction.

[50:36.100 --> 50:41.120] So some of the details are interesting, like to do that, they said that we would need 125

[50:41.120 --> 50:46.480] high altitude tankers that would have to be built because we can't really use hand me

[50:46.480 --> 50:49.920] down planes or planes that exist right now.

[50:49.920 --> 50:55.080] They're not good enough to get enough of the aerosol at high enough altitude in order to

[50:55.080 --> 50:56.120] pull this off.

[50:56.120 --> 51:01.200] So we would need to design and build these high altitude tankers, but it's totally doable.

[51:01.200 --> 51:04.920] This is not FTL, faster than light drive we're creating here.

[51:04.920 --> 51:09.840] These are just high-altitude tankers, not that difficult in the grand scheme of things.

[51:09.840 --> 51:15.120] So they would release trillions of grams of aerosols like sulfur dioxide once per year